No. It’s like microwaving a TV dinner and saying you cooked.

There are levels to everything. People have a very shallow understanding of how these tools work.

Some AI art is low effort.

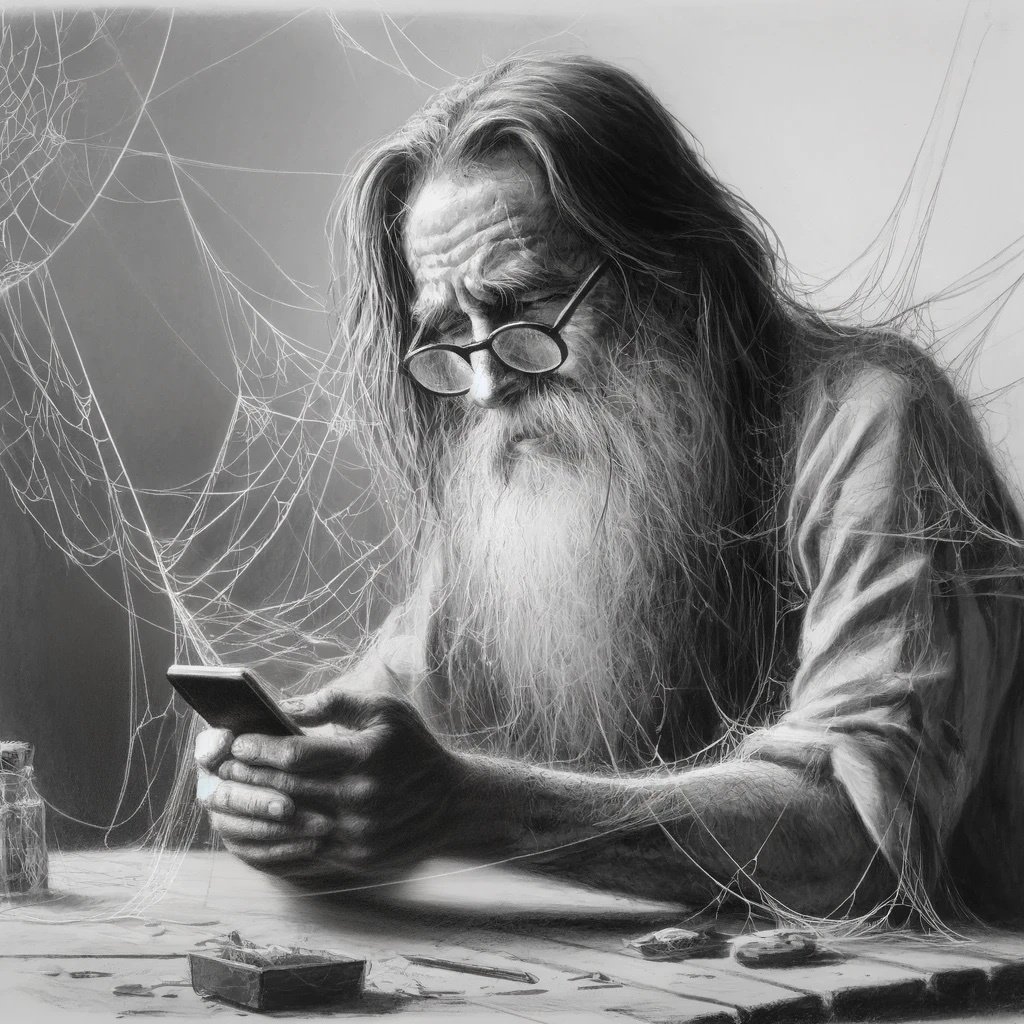

Some AI art is extremely involved. It can often take longer to get what you want out of it than it would have taken to just draw it. I’ve spent 8 or 9 hours fiddling with inputs and settings for a piece, and it still didn’t come out as good as it would have if I had commissioned an artist.

I’ve been using it to get “close,” then using the result as a reference when commissioning things.

Yes, I also think that’s how it is. If you want to generate something meaningful, something that contributes something deep, it takes quite a lot of effort. You need to do the prompt engineering and generate a few hundred images, skim through them to find the most promising ones, then edit them. Maybe combine more than one, or put it back into the AI to get the right number of limbs and fingers, and the lighting, background, etc. right.

You can just do a one-shot: generate anything and upload it to the internet. But it wouldn’t have the same value. It works like this for anything, though. I can take a photo of something; somebody else can have their photos printed in a magazine or put on an exhibition. It’s a difference in skill and effort. Taking artistic photographs probably also takes some time and effort, and you can ask the same question there: are photographs art? It depends. For other meanings of ‘OC’: sure. The generated output is unique, and you created it.

That’s a great analogy. TV dinners, while presentable at first glance, are both low effort and not that great.

I generally consider “OC” to mean specifically that it’s original - you didn’t get it from someplace else, so broadly yes if you’re the one who had it generated.

But if it’s a community for art or photography generally, I don’t think AI art belongs there - the skills and talent required are just too different. I love AI art communities, I just think it’s a separate thing.

But following that logic, “OC” would mean you didn’t get it from “someplace else”, but since AI is trained by looking at pieces made by other people to learn, it technically did get it from someplace else.

by looking at pieces made by other people to learn

When humans do it, it’s inspiration.

When computers do it, it’s theft.

Unironically, yes.

I don’t understand people like you. You seem exactly like the ones who destroyed machines a few centuries ago because the machines would take our jobs. It turns out they didn’t. AI will succeed as well, and it won’t put us all out of work.


Humans also look at other people’s art to learn. They might also really like someone else’s style and want to produce works in that style themselves; does that make them AI? Humans have been copying and remixing off of each other since the beginning of time.

The fact that a lot of movie pitches boil down to “thing A meets thing B,” and that the person listening can autocomplete that “prompt” well enough to decide whether or not to invest in the idea, is the clearest evidence of that. Personally, I don’t think that humans being slower, and unable to reproduce things perfectly even when that’s what we’re trying to do, means that we somehow have a monopoly on this thing called creativity or originality.

You could maybe argue that it comes down to intentionality: because the AI isn’t “conscious” yet, it isn’t deciding on its own to create the artwork, or to accept the art commission via the prompt, so it can’t have truly created the art, the same way Photoshop didn’t create the art.

But I’ve always found the argument of “it’s not actually making anything because it had to look at all these other works by these other people first” a little disingenuous because it ignores the way humans learn and experience things since the day we are born.

Then everything that is created by a real person is not OC either. I don’t know why people think that we’re somehow special.

You could make the same argument about humans who look at other stories or art before creating their own, influenced by what they’ve seen.

Let’s say I give an AI a prompt to create a picture of a cute puppy about six weeks old, but as large as a building; instead of paws, each leg ends in a living rubber ducky the size of a car, and the puppy is squatting to poop, but instead of poop, out come the great men and women of science, like Mendel, Pasteur, Nobel, Curie, Einstein, and others, all landing in a pile. Oh, and I’d like the picture to be in the style of Renoir. I think we could agree that the resulting picture wouldn’t be a copy of any existing one, and I’d feel justified in calling it original content. I’ve seen a lot of hand-painted works that were more derivative of other work, but that everyone agrees is OC.

Or someone who studies art, looks at art by other famous painters, and then becomes a painter themselves.

It depends on the context for me. As a meme base, or to make a joke when you don’t have the skills? Sure. In an art community? No.

AI art is not OC. It cannot be.

Why would human art be then?

Because humans can have new ideas.

Those ‘new’ ideas can be inputted as a prompt into an AI image generator. Would the output of that satisfy your criteria for OC?

No. Every parameter in the LLM, not just the prompt, is or was a new human idea at some point.

And would you say that an idea formed by combining multiple old human ideas is not original? If the influence of an existing idea disqualifies something from being original, then very little could be considered original. If something beyond existing ideas is needed for originality, then what is that thing that is beyond the capability of an AI?

Personally, I would argue that any new combination of existing ideas is inherently original (i.e. a fresh perspective.)

I’m talking specifically about image generators (rather than LLMs), which are trained on billions of images, some of which would be widely considered artwork (old ideas?) and others documentary photographs.

How new is a new idea, if it must be formulated in an existing language?

Language is itself invented by humans.

Not by me though, or you. Can’t have new ideas 😞

“Humans can have new ideas” isn’t “humans only have new ideas”.

Those are not new ideas. They are based on a person’s experiences up to that point. There is nothing magical in the human brain that we cannot eventually implement in AI.

How so? What is it that makes art OC that cannot be applied to AI created art? I think it would take an extremely narrow definition which would also exclude a significant amount of human created art.

No

“Original Content”.

Is it content? Yes.

Is it original? That depends on the context. What do you ask about, in what context? Where is it placed? Which AI? How was it trained? How does it replicate?

If someone generates an image, it is original in that narrow context - between them and the AI.

Is the AI producing originals, original interpretations, original replications, or only transforming other content? I don’t think you can make a general statement on that. It’s too broad and unspecific a question.

You absolutely can make a general statement. Humans don’t make original content if you don’t think AIs do. The process is basically the same. A human learns to make art, and specific styles, and then produces something from that library of training. An AI does the same thing.

People saying an AI doesn’t create art from a human prompt don’t understand how humans work.

Large language models (what marketing departments are calling “AI”) cannot synthesize new ideas or knowledge.

Don’t know what you are talking about. GPT-4 absolutely can write new stories. What differentiates that from a new idea?

I can’t tell whether you’re saying I don’t know what I’m talking about, or you don’t know what I’m talking about.

Doesn’t matter.

When the “AI can’t have creativity/new ideas etc.” argument comes up in conversation, I often get the impression it’s a protective reaction rather than a considered conclusion.

Physician, heal thyself, then.

First of all, yes, they can for all practical purposes. Or, alternately, neither can humans, so the point is academic. There is little difference between the end result from an AI and from a human taken at random.

Secondly, LLMs aren’t really what people are talking about when they talk about AI art.

First of all, yes, they can for all practical purposes. Or, alternately, neither can humans, so the point is academic. There is little difference between the end result from an AI and from a human taken at random.

Not even the AI companies’ marketing departments go that far.

No.

Nothing is oc.

There is a book, “Steal Like an Artist” by Austin Kleon, that addresses this idea. It’s a really short read with interesting visuals.

As for AI specifically: AI image generation tools are just that, a tool. Using them doesn’t automatically discredit your work. There is a skillset in getting them to produce your vision, and that vision is the human element not present in the tool alone.

I personally don’t think terribly highly of AI art, but the idea that it’s “just stealing real artists’ hard work” is absurd. It makes art accessible to people intimidated by other mediums. Chill out and let people make shit.

So if an AI is trained on many copyrighted images from artists without their being asked, and someone then asks the AI to draw in one of those artists’ styles, is that not copyright infringement or theft?

I mean, weirdly enough, if a person did that it would be more OK than if an AI did. But the difference is that you, as a human, get creative and create an image. An AI is not really creative: its skill is to recreate the exact image as if it were stored as a file, or to mix and change it with thousands of other images.

I don’t have a position on this topic; I can neither agree nor disagree.

This is my problem. The tech itself is fine, no one is arguing about training data and making art from trained data.

But the source of all of that data was ripped without artists consent. They did not agree to take part in this. (And no, I don’t think clicking “I Accept” 15 years ago on DeviantArt should count, we had no concept of this back then). Then on top of that people are profiting off of the stolen art.

I’m pretty sure this whole issue has to end either in some catastrophe or in the complete abolition of intellectual property rights. I already have no love for those, so I’m fairly convinced we should treat artists and inventors getting their needs met and being able to realise their projects as a separate issue from them effectively owning ideas.

Isn’t that a bit like someone faking a painting, let’s say by Monet? That can be anything from 100% alright to illegal.

In addition to that, there’s also a difference between being inspired by, or copying something.

I think all of that is just a variation of an old and well known problem.

Being inspired by vs. copying is what I had in mind when I wrote my comment. I came to the conclusion that AI can’t be creative and can’t be inspired, because it takes a 1:1 copy of the picture and stores it in a weighted neural network. Therefore it can also recreate the picture 1:1, and manipulate or change it, or combine it with other images using patterns it has learned. In the end, the picture is stored on a silicon device, but instead of an ordered structure, it’s stored in a structure that looks chaotic to us but could easily be reassembled into the original.

because it takes a 1:1 copy of the picture and stores it

What makes you think that? That’s wrong. Sure, you can try to train a neural network to remember something exactly. But that would waste gigabytes of memory and lots of computing power on a photo that you could just store on the smallest thumb drive as a JPG and clone with the digital precision computers are made for. You don’t need a neural net for that. And once you start feeding it the third or fourth photo, the first one will deteriorate, and it will become difficult to reproduce each of them exactly. I’m not an expert on machine learning, but I think the fact that floating-point arithmetic has finite precision, and that we’re talking about statistics and hundreds of thousands to millions of pixels per photo, makes it even more difficult to store things exactly.

Actually, the way machine learning models work is this: the model looks at lots of photos and adapts its weights a tiny bit each time. Nothing gets copied 1:1. A small amount of information is transferred from each item into the weights, and that is how you want it to work for it to be useful. It should not memorise each of Van Gogh’s paintings 1:1, because that wouldn’t allow you to create a new fake Van Gogh. You want it to understand what Van Gogh’s style looks like. You want it to learn concepts and store more abstract knowledge that it can then apply to new tasks. I hope I explained this well enough. If machine learning worked the way you described, it would be nothing more than expensive storage. It could reproduce things 1:1, but you obviously can’t tell your thumb drive or hard disk to create a Mona Lisa in a new, previously unseen way.
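To make the “adjust the weights a tiny bit per image” idea concrete, here is a deliberately oversimplified toy in Python. It is a sketch under heavy assumptions, not how Stable Diffusion or any real image generator is trained: the “model” is a single weight vector, each “photo” is just four made-up pixel values, and `train_toy_model` is a hypothetical function that runs stochastic gradient descent on a squared-error loss, so each step nudges the weights only a small fraction (the learning rate `lr`) toward one photo.

```python
import random


def train_toy_model(images, lr=0.01, epochs=200, seed=0):
    """Toy illustration: one weight vector nudged slightly toward each image.

    Hypothetical and deliberately oversimplified (SGD on a squared-error loss);
    this is NOT how Stable Diffusion or other real image generators are trained.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(images[0])  # start with a blank "model"
    order = list(range(len(images)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the images in a random order each epoch
        for i in order:
            image = images[i]
            # Gradient of 0.5 * ||w - x||^2 with respect to w is (w - x).
            # Each step moves every weight only a small fraction (lr) toward x.
            weights = [w - lr * (w - x) for w, x in zip(weights, image)]
    return weights


if __name__ == "__main__":
    # Four made-up 4-pixel "photos" (hypothetical data, brightness 0..1).
    photos = [
        [0.9, 0.1, 0.5, 0.3],
        [0.2, 0.8, 0.4, 0.6],
        [0.5, 0.5, 0.9, 0.1],
        [0.1, 0.3, 0.2, 0.9],
    ]
    learned = train_toy_model(photos)
    print("learned weights:", [round(w, 3) for w in learned])
    for n, photo in enumerate(photos, start=1):
        # How far the learned weights are from each individual photo.
        gap = max(abs(w - x) for w, x in zip(learned, photo))
        print(f"photo {n}: largest pixel difference = {gap:.3f}")
```

After training, the weights end up close to the per-pixel average of all four photos, and none of the individual photos can be read back out of them exactly. Real models have vastly more parameters and a very different objective, so this only illustrates the “small nudge per example” mechanism, not the full picture.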

Just take Stable Diffusion, for example, and tell it to recreate the Mona Lisa. Maybe regenerate a few times. You’ll see it doesn’t have the exact pixel values of the original image, and you won’t be able to get a 1:1 copy. If you look at a few outputs, you’ll see it draws it from memory, with some variation. It also reproduces the painting as if photographed from slightly different angles, with and without the golden frame around it. Once you tell it to draw her frowning or in anime style, you’ll see that the neural network has learned the names of facial expressions and painting styles, and which ones are present in the Mona Lisa, so well that it can swap them effortlessly.

And even if neural networks can remember things very precisely… what about people with eidetic memory? What about the painters in the 19th century who painted very photorealistic landscapes or small towns? Do we now say this isn’t original because they portrayed an existing village? No, of course it’s art, and we’re happy we get to know exactly how things looked back then.

Well, you mentioned that it could reproduce the image 1:1, which is exactly my point. It doesn’t matter what your thumb drive can’t do.

And I guess the main point is that every pixel is used for training without changes, which makes it something of a copyright issue, since some images don’t even allow themselves to be used elsewhere and edited.

OC can infringe copyrights.

The way you put original content in quotes is weird.

OC as an acronym typically just means something that someone made. In this sense, yeah, if you make something with AI, then it’s “your OC”.

“Original content” spelled out as words generally means something slightly different, and that’s more debatable.

Having used AI art tools, I can say there is more creativity involved than people think. When you’re just generating images, sure, there’s less creativity than in traditional digital art, of course, but it is not a wholly uncreative process. Take in-painting: you can selectively generate in just some portions of the image. Or you can sketch and then generate based on that.

All that said though I don’t think “creativity” is necessary for something to be considered OC. It just needs to have been made by them.

Would you call fan art of well known characters OC? I would.

Nice animated PFP, very fancy.

That’s an interesting question. I haven’t spent very much time thinking about how to define AI art. My immediate thought is that AI art can be OC, but it should also be labeled as such. It’s important to know if a person created the content vs prompting an AI to generate the content. The closest example I can think of is asking someone to paint something for you instead of painting it yourself.

Steam bans games that contain such AI content because it is nowhere near OC, unless you trained the AI only on your own copyrighted images, which Midjourney and various other AIs aren’t. They are all trained on copyrighted images without asking.

They accept it when you trained on data you had the right to [train and republish on]. That isn’t limited to only your own content.

Absolutely not

It’s an interesting thing to ponder and my opinion is that like many other things in life something being ‘OC’ is a spectrum rather than a binary thing.

If I apply a B&W filter to an image, is that OC? Obviously not.

But what if I make an artwork that’s formed from hundreds of smaller artworks, like this example? That definitely deserves the OC tag.

AI art is also somewhere in that spectrum and even then it changes depending on how AI was used to make the art. Each person has a different line on the spectrum where things transition from non OC to OC, so the answer to this would be different for everyone.

As someone who has been trying to bring my vision for a piece to fruition using AI for months… I absolutely think AI art is OC. The argument that it references existing work cracks me up, because all of art history is derivative of what came before. I do think there are “low effort” pieces, but you get that in other mediums as well, such as photography. Also… need I mention Duchamp and the urinal?

Yeah, in the same way that remixing a song is considered original.

Mmm, yeah. Consider Daft Punk: songs made entirely out of samples from other people’s songs, but tweaked and remixed enough to make something that anyone would consider original. I think people arguing, essentially, that “it only counts as music if the songs being sampled were originally recorded by the artist” are being a little disingenuous.

I really think it comes down to the individual. I personally think that Aldous Huxley’s book Brave New World was likely derived at least partially from the book We by Russian author Yevgeny Zamyatin, but both Aldous Huxley and my 10th grade English teacher would disagree. I don’t think it’s wrong to take someone else’s work and add to it in a way you view as beneficial. If anything, I view it as a natural evolution, and if it gives someone something to enjoy or makes the creative process a little easier, I’m all for it.