Like a privacy-based, fully open source browser. Wouldn’t it be more hackable because everyone knows the code? And is a global, privacy-based GPay alternative possible? What about targeted hacking: is someone using a closed source application better off than someone using open source?

As long as there is an incentive to do so, malicious actors will exploit the software whether it is open or closed…

Making something open source does make it easier for malicious actors, but it also allows honest actors to find and fix exploits before they can be used - something they won’t/can’t do for closed source, meaning you have to rely on in-house devs to review/find/fix everything.

Absolutely, this is a good explanation.

And to add, so many pieces of software share code through shared libraries or systems. Open source means if there is a flaw in one library that is found and fixed, all the software that uses it downstream can benefit.

Closed source, good actors might not even know their software is using flawed older libraries as it’s hidden from view.
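To make the downstream point concrete, here is a minimal sketch of the kind of check that is only possible when you can see which libraries a program uses. The package names, versions, and the `KNOWN_FIXED` table are all made up for illustration; a real check would pull from an actual advisory database.

```python
# Hypothetical advisory data: package name -> first version containing the fix.
# These names and versions are invented for illustration only.
KNOWN_FIXED = {
    "examplelib": (2, 4, 1),
    "otherlib": (1, 0, 9),
}


def parse_version(text):
    """Turn '2.4.0' into (2, 4, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def find_outdated(dependencies):
    """Return the names of dependencies older than their first fixed version."""
    flagged = []
    for name, version in dependencies.items():
        fixed = KNOWN_FIXED.get(name)
        if fixed is not None and parse_version(version) < fixed:
            flagged.append(name)
    return sorted(flagged)


# A visible dependency list, as you would have for an open source project.
manifest = {"examplelib": "2.4.0", "otherlib": "1.0.9", "thirdlib": "0.3.0"}
print(find_outdated(manifest))
```

With a closed source binary there is often no manifest to feed into a check like this, which is the problem described above.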

Plus open source allows the code to be audited to ensure the software is what it says it is. There are plenty of examples of commercial closed software deliberately doing things that do not benefit its user, but do benefit the company that makes the software.

The track record of open source projects for fixing known vulnerabilities is pretty good. Closed source suppliers, on the other hand, have frequently been caught trying to sweep things under the rug.

It’s a double-edged sword: everybody can look for vulnerabilities, which may help some attackers, but it also means that everyone can volunteer to fix them. To my understanding, professional security auditors have concluded that (at least for big free projects) open source is safer than closed source because more people fix bugs than exploit them.

No, more specifically, it’s safer because bugs can be found readily.

Yes, this increases your attack surface. But way worse than the easily-found-easily-exploited bug is the bug that is being exploited and you have fuck all idea it’s even there.

TETRA (the digital radio standard) is a nice example of that. It was ‘secure’ for a long time - or at least we don’t know otherwise, because the majority of issues found when an independent team finally bothered to reverse engineer that thing can be exploited without the operators noticing.

With an open standard people would’ve told them in the 90s already that they’re morons.

Or the exploit has been found but the devs do fuck all to fix it.

FOSS generally puts more pressure on people to write better and safer code, because you know everyone is going to look at it. Even when vulnerabilities are found, they are usually fixed much faster than on the proprietary side. There are stories of people waiting 6 months for Microsoft to fix a vulnerability, while an OpenSSH or OpenSSL issue is usually fixed in a few days.

No, absolutely not. Security through obscurity hasn’t worked for decades.

I do not think that ever worked.

No, but I sure have dealt with a lot of stupid decisions for years because somebody thought it would and used that as an excuse to be wildly insecure to save time.

It works if working is simply not knowing there are exploits happening.

During the browser war years when M$ forced everyone into using Internet Explorer via their OS monopoly, malware exploded. It was so easy to end up with a broken system because IE was tightly coupled to Windows. I remember all of us having to fix our parents’ computers, then install a ton of anti-virus software to prevent it. Identity theft and tons of other exploits were rampant.

Some people found some of these bugs and published them on various sites… and what was Microsoft’s response? To sue those sites out of existence, and let the malware keep stacking up. The problem really didn’t go away until Opera and Firefox came along.

Lots of good and relevant reading here:

The fact that most hacked software is closed source (i.e. Windows and most Windows tools) proves that open source software is not less secure.

Not really. That Windows is targeted more has little to do with it being closed source or necessarily less secure; it is ubiquitous, so from a hacker/malware point of view it offers the best chance of getting a financial reward for their efforts.

However, it being closed source makes it harder to identify and patch the holes. We only come across those holes either because a good actor has taken the time to find them (which is hard work) or because a bad actor has started exploiting the flaws and been caught - which is terrible, as the horse has already bolted and the flaw is often only stumbled across after damage has been done.

Open source does not magically fix that problem, it just puts the good and bad actors on a more level open playing field. Software can be secure with open code as security is about good design rather than obscuration. But open source code can also be very insecure due to bad design, and those flaws are open to anyone to see and exploit. And it requires people taking the time and effort to actually review and fix the code. There is less incentive to do that in some ways as it is currently less targeted.

However there are a lot more benefits to open source beyond that, including transparency, audit, and collaboration. It’s those benefits together that make open source compelling.

Security is also more than being hacked. There are lots of examples of closed source software doing things to benefit its makers rather than its users - scraping user data, for example, and sending it home to be exploited. That’s harder to hide in open source software, but someone also has to take the time to look.

Not really, Windows is the most targeted because it’s the most used. If Linux had comparable market share it would be attacked way more.

Most of the services you use every day run on Linux servers. Even Microsoft uses Linux on their servers. And these services, not an average laptop, are the main targets of malicious actors.

The vast majority of behind-the-scenes infra that the end user never sees is open source, even if the end-user part is proprietary. E.g. Facebook and Xwitter are proprietary, but run on open source infrastructure like Docker, Kubernetes, Nginx etc.

Proprietary OSes are workstation/office/home PC land. They have way more security issues due to crap coding, whereas security problems with open source server stuff are as a rule the fault of admins misconfiguring services and not keeping their software up to date.

Linux servers are hacked left and right on a daily basis.

Linux is used a lot, though, in a lot of high value situations (servers).

Oh yeah, definitely but those tend to be different attacks than would target random consumer computers.

Being open source definitely plays a role in Linux security, but it’s minor compared to stuff like market share, user privilege, package management vs just installing random exes, different distros using different packaging systems.

Linux is the most used OS, and it faces many attacks every day. The problem is that you can’t see them, and that’s why you think there aren’t Linux systems or attacks on them.

I like how you just ignored the comment you replied to which acknowledged linux makes up most servers and instead just argued against a guy you made up.

I didn’t ignore.

those tend to be different attacks than would target random consumer computers

That doesn’t mean attacks on Linux are minor, just a different kind of attack, because a user mistake is easier to exploit than a vulnerability in software/code. That’s not about software mistakes that create vulnerabilities; that’s a user mistake that installs malware.

open source definitely plays a role in Linux security, but it’s minor compared to stuff like market share, user privilege, package management vs just installing random exes, different distros using different packaging systems

The kinds of attacks you are talking about are actually the “minor” attacks that occur daily, though they are normally the most effective. There are a lot of scams, but every day or every hour there are millions or billions of attacks everywhere, or at least that’s what the cybersecurity team at my company showed me. They are there 24/7 so that no attack ever penetrates the organization. Imperva and Cloudflare (for example) have powerful firewalls that block many attacks every minute. And you are comparing that to malware that a user installs.

So that’s why I am saying: just because you can’t see them doesn’t mean there aren’t attacks.

Edit: More data added on bottom.

I found this: https://www.imperva.com/cyber-threat-index/

The Cyber Threat Index is calculated using data gathered from all Imperva sensors across the world including over:

- Over 25 monthly PBs (petabytes, 10¹⁵ bytes) of network traffic passed through our CDN

- 30 billion (10⁹) monthly Web application attacks, across 1 trillion (10¹²) HTTP requests analyzed by our Web Application Firewall service (Cloud WAF)

- Hundreds of monthly application and database vulnerabilities, as processed by our security intelligence aggregation from multiple sources

open source definitely plays a role in Linux security, but it’s minor compared to stuff like market share, user privilege,

Is saying the role open source plays in Linux security is minor compared to the role other aspects play, not that the attacks are minor.

Someone hasn’t been paying attention for decades and instead chose to be confidently incorrect

You don’t need the source code to find vulnerabilities.

To fix them you almost always need access to the source code.

Open source means anyone able to read the code can find and fix vulnerabilities to prevent them from being exploited in the future. It’s just as easy to exploit closed source software through fuzzing and other means, but the only people doing that are the devs and hackers, not the thousands of other people invested in the project.
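For anyone who hasn’t seen fuzzing before, here is a rough sketch of the idea: throw random inputs at a program and record which ones make it crash, no source code needed. The `parse_record` function is a made-up toy standing in for a closed binary, with a deliberately planted bug; real fuzzers are far more sophisticated than this.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy parser standing in for a closed source target (hypothetical).

    Format: one length byte, that many payload bytes, then one checksum byte.
    Planted bug: the length byte is trusted and never checked against the input.
    """
    length = data[0]                 # fails on empty input
    payload = data[1:1 + length]
    _checksum = data[1 + length]     # fails if the input is shorter than declared
    return payload


def fuzz(target, rounds=1000, seed=0):
    """Call target with random byte strings and collect the inputs that crash it."""
    rng = random.Random(seed)
    crashes = []
    for _ in range(rounds):
        size = rng.randint(0, 8)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except Exception as exc:
            # The fuzzer only sees the failure, never the source code.
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_record)
print(len(crashes), "crashing inputs found")
```

The fuzzer treats the target as a black box, which is why hiding the source doesn’t stop attackers from finding crashes. What the source gives you is the ability to see why it crashed and fix it.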

It’s much easier to slip backdoors into closed source software too.

Neither closed source nor open source is a guarantee of a quality code base.

There are white hat (good person) as well as black hat hackers.

If everyone can see the source code, there are more eyes able to spot problems and fix them.

And someone can fork the codebase if the original author or current maintainer refuses to fix major issues. Closed source software vendors refuse to do so quite frequently.

On the contrary, it’s easier to secure because anyone can contribute. See a bug? Report it / Fix it.

Take backdoors for example. The CIA can abuse a Windows backdoor all they want because we can’t see it; on Linux, however, such a thing doesn’t exist because we have the code.

And even if a 0-day exploit were found and used, it would get patched really fast; it would then be up to the user to do their due diligence and update.

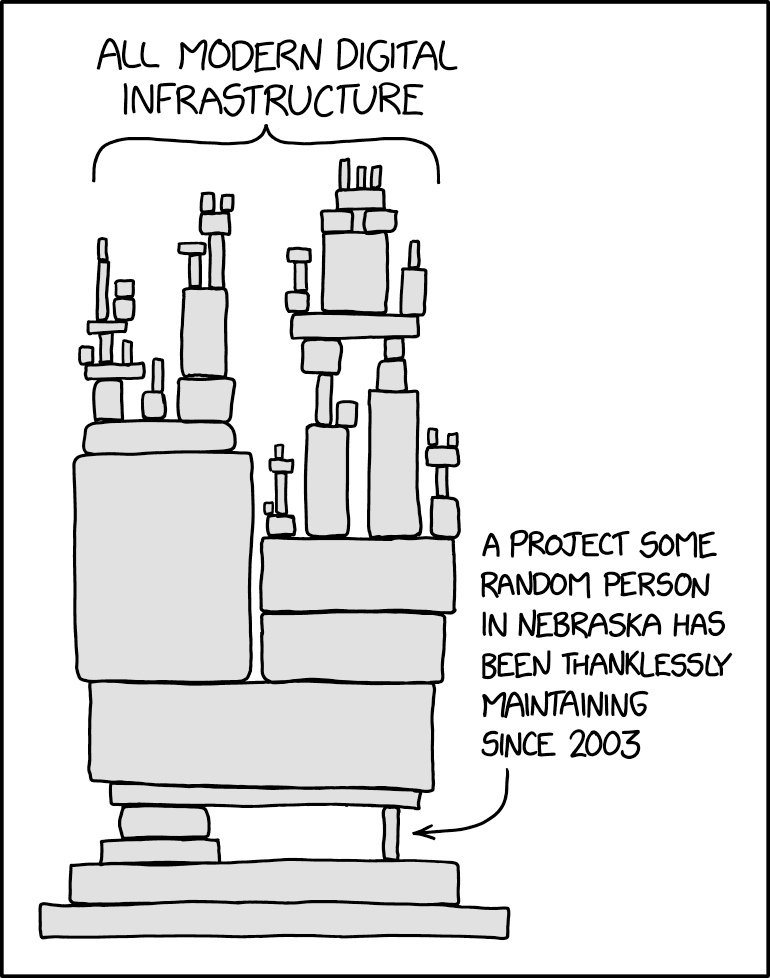

This is where most of the problems in open source come from. Just because anyone can look at the source code doesn’t mean that anyone actually is. It frequently seems that everyone just assumes that popular/common libraries have been reviewed and vetted, but never bother to check for themselves unless they happen to work in application security. It’s like Douglas Adams’ SEP field. And many common modules became common because they were convenient and/or easy to use, not because they were rigorously developed and tested with strong security principles.

Of course expecting every user to inspect the source of every piece of software they use, every time it gets an update, is utterly ridiculous. No one would ever actually use anything.

With closed source, the problem is that you can’t see the code so you need to be sure that you trust the developer. With open source, the problem is spaghetti code (and worse, spaghetti dependencies) so again you need to be sure that you trust the developer(s).

Yeah, that’s my concern as well.

Either way, the issue is trust. With popular/widely used open source projects, you can at least democratize trust to some extent (many people have worked on this, and many more have used it). Smaller projects are more risky. This is true for proprietary software also - generally, Microsoft is putting effort into fixing vulnerabilities in their products, but if you buy specialty software from a small business with a registered address in Ireland but actually based out of Moldova, they will probably have different quality standards.

Whether open or closed, you should try to understand the incentive model of the developers. Is it paid software? Is there a license agreement? Is it ad supported? Or donation supported? Is it a volunteer project? Is it collecting data about its users?

Some open source software is developed by companies but distributed freely. Bitwarden is a great example of this. It’s probably the best password manager out there right now. It’s free for individual use and for self hosting. The company makes money by selling implementation and support services to businesses. This model has a lot of benefits, and the code projects that come out of such companies are generally very stable and trustworthy.

The trust issue is slightly different in form between open and closed source, but ultimately it’s the same issue. If the security of what you’re doing matters, then you need to know who you’re working with and whether their interests align with yours.

Smaller projects are more risky. This is true for proprietary software also - generally,

Not necessarily. Large commercial vendors might be much more likely to kill off one of many projects, even large ones, than a small vendor is to kill off their only project.

Hold up, hold up. “On Linux such a thing doesn’t exist” is a very bold statement for something containing millions of lines of code.

There were, and will be, more than enough zero days in Linux, be it because of malice or incompetence.

Open source doesn’t say anything about the quality of the code.

Ever heard of log4j? Open source code…

That part is about backdoors, not zero days. However, backdoors may still exist. Linux has libraries and other code, as well as code that hasn’t been checked well enough, that could contain backdoors. It’s less likely than on Windows, but still possible.

I’ve heard from “reputable sources” (internet schizos) that every cpu since 2010 has been backdoored by the nsa. This can be exploited on any platform.

There’s the Intel Management Engine and the AMD Platform Security Processor. Both manage low-level tasks like booting, and have access to network data. AMD’s PSP is known to have unrestricted access to user memory.

There have been security vulnerabilities that would grant access to sensitive data exploiting both systems if not patched.

As for a backdoor, there’s no evidence but I wouldn’t be surprised. The NSA has programs to insert backdoors into consumer products and these seem like the perfect place to do it. But again, there’s no evidence either chip is part of these programs.

Putting aside the idea that everyone can read the code and find/fix exploits, sometimes it’s good to be open to vulnerabilities because you know where/what to fix.

Sometimes closed code is exploited for years before the owner or the general public finds out, and that leads to more problems.

No, in general the code quality in large open source projects is just as good as in proprietary programs - for large projects, the majority of contributions come from professional software developers being paid to work on the project either by their employer or via grant funding, and those that aren’t still get their changes reviewed by professionals, same as everyone else.

Smaller projects tend to be a mixed bag - the internet has a “one guy who has been maintaining this absolutely critical piece of infrastructure unpaid on evenings and weekends for the past decade” problem, but even then open source has one major advantage - transparency.

If the code is open source, it’s relatively easy for anyone to look at the code and spot bugs - even if the first person to find a bug is a bad guy who keeps the bug secret, the odds are pretty good that someone else will also find the bug and tell the developers about it so they can fix it, and tell the program’s users about it so they know that they need to take action to protect themselves.

For proprietary programs, there is a much stronger incentive to keep bugs secret, both for bad guys (it’s harder to find bugs if you can’t look at the code, so the odds of your useful bug being publicized are lower) and for the developers (bugs are bad for business and cost us money, so we’ll sue you if you publish). Some larger players have “bug bounties” - if you find a bug and report it to us under embargo so we can fix it before you publish, we’ll pay you - because being perceived as having a secure, trustworthy product is worth the cost, but these are often more marketing tools than actual security features.

Yes and no. While people can read the code and potentially exploit it, the opposite is also true. Having full access to it means others can find flaws in the code you’ve missed and contribute improvements to it. Being closed-source can be a detriment in that regard, so much so companies often rely on external security audits and the like.

Good question. I’ve always wondered in general about open source and hackability. Like, is Linux much more hackable than Windows or Mac?

It depends on the distro but generally Linux is much more secure because it doesn’t have backdoors built in for weird shit like codecs and registration.

They are equally exploitable, but those exploits are generally easier to find and fix on open source software than closed.

As an example, look at the exploit chain Apple only recently patched, “TriangleDB”. The exploit relied on several security flaws and undocumented functions, and it was used extensively in state-sponsored malware such as Pegasus for years. If any part of the exploit chain had been patched, the malware wouldn’t have worked. It took a Russian cybersecurity firm significant effort to track down how the exploit worked when they found out they were being targeted by the malware.

Security through obscurity is a notoriously sophomoric strategy that won’t keep out a dedicated attacker.

That and some major proprietary software has had built-in backdoors for decades at this point, I’m pretty sure (I think this is more of a Windows than an Apple thing, but Apple has its own issues)