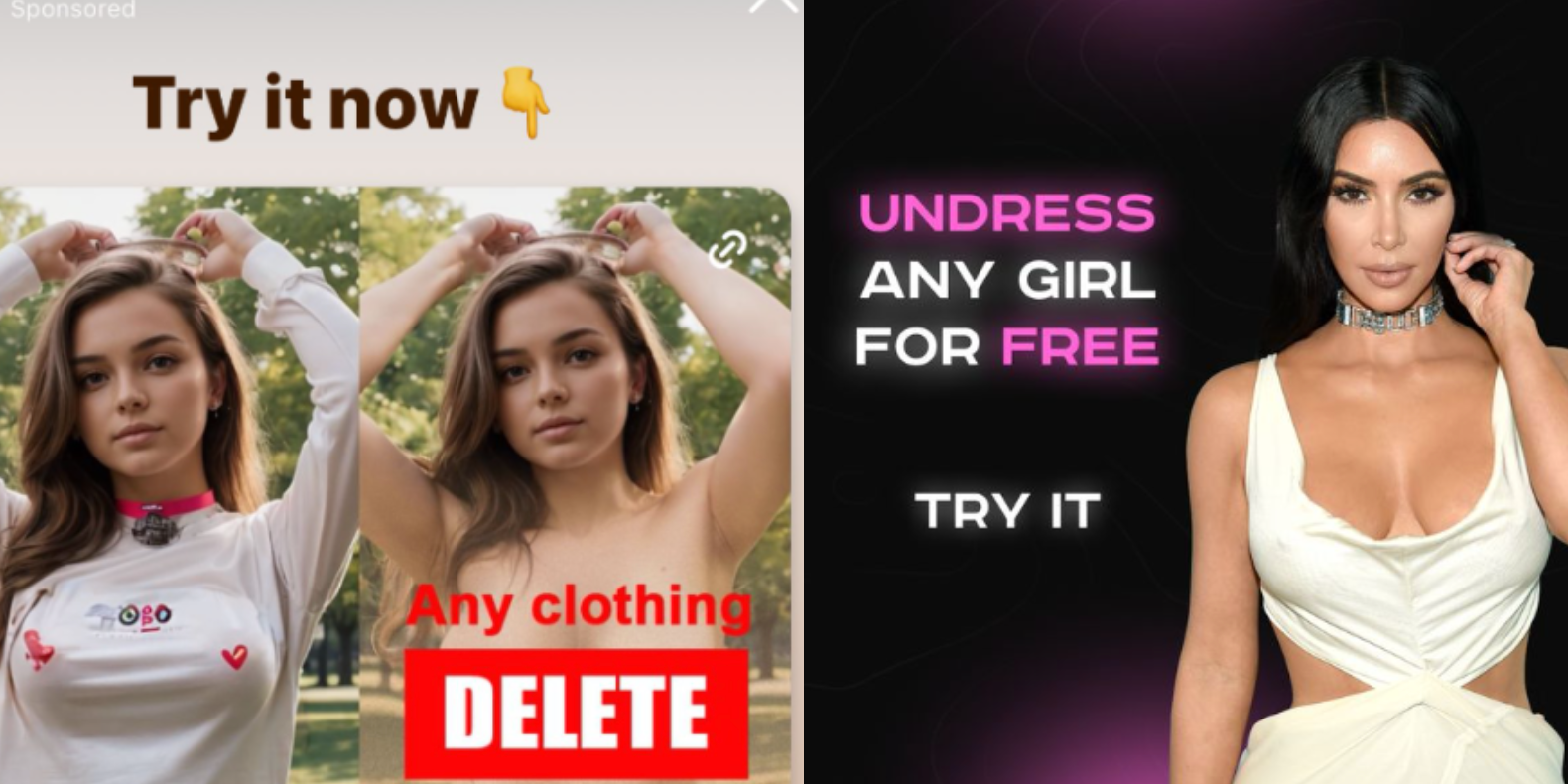

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden on the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has taken down several of these ads previously, many ads that explicitly invited users to create nudes, along with some of the ad buyers behind them, were still up until I reached out to Meta for comment. Some of these ads were for the best known nonconsensual “undress” or “nudify” services on the internet.

Seen similar stuff on TikTok.

That’s the big problem with ad marketplaces and automation: the ads are rarely vetted by a human. You can just give them money, upload your ad, and they’ll happily display it. They rely entirely on users to report them, which most people don’t do because they’re ads, and they won’t take it down unless it’s really bad.

It’s especially bad on reels/shorts for pretty much all platforms. Tons of financial scams looking to steal personal info or worse. And I had one on a Facebook reel for boner pills that was legit a minute long of hardcore porn. Not just nudity but straight up uncensored fucking.

The user reports are reviewed by the same model that screened the ad up-front, so it does jack shit.

Actually, a good 99% of my reports end up in the video being taken down. Whether it’s because of mass reports or whether they actually review it is unclear.

What’s weird is the algorithm still seems to register that as engagement, so lately I’ve been reporting 20+ videos a day because it keeps showing them to me on my FYP. It’s wild.

That’s a clever way of getting people to work for them as moderators.

Okay this is going to be one of the amazingly good uses of the newer multimodal AI, it’ll be able to watch every submission and categorize them with a lot more nuance than older classifier systems.

We’re probably only a couple years away from seeing a system like that in production in most social media companies.

Nice pipe dream, but the current fundamental model of AI is not and cannot be made deterministic. Until that fundamental change is developed, it isn’t possible.

> the current fundamental model of AI is not and cannot be made deterministic.

I have to constantly remind people about this very simple fact of AI modeling right now. Keep up the good work!

What do you mean? AI absolutely can be made deterministic. Do you have a source to back up your claim?

You know what’s not deterministic? Human content reviewers.

Besides, determinism isn’t important for utility. Even if AI classified an ad wrong 5% of the time, it’d still massively clean up the spammy advertisers. But they’re far, FAR more accurate than that.

https://www.sitation.com/non-determinism-in-ai-llm-output/

AI can be made deterministic, yes, absolutely.

The current design everyone is using (LLMs) cannot be made deterministic.
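The disagreement above is partly about where the randomness comes from. Here is a minimal sketch, assuming a toy "model" that just returns fixed scores for three made-up labels (nothing here is a real LLM or any platform's API; `TOY_LOGITS`, `greedy`, and `sample` are hypothetical names). Picking the top score gives the same answer on every run, and sampling only varies when the seed does. Real deployments can still differ from run to run for other reasons, such as floating-point ordering on GPUs, which this toy does not capture.

```python
import math
import random

# Hypothetical scores a classifier might assign to one ad; made up for illustration.
TOY_LOGITS = {"allow": 1.2, "review": 0.9, "reject": 2.1}


def softmax(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {k: v / temperature for k, v in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - top) for k, v in scaled.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}


def greedy(logits):
    """Always pick the highest score: no randomness involved."""
    return max(logits, key=logits.get)


def sample(logits, temperature, seed):
    """Draw a label at random, but from a seeded generator so it is repeatable."""
    rng = random.Random(seed)
    probs = softmax(logits, temperature)
    labels = sorted(probs)  # fixed order so the draw does not depend on dict order
    weights = [probs[k] for k in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


if __name__ == "__main__":
    # Same label three times: greedy decoding has no random step.
    print([greedy(TOY_LOGITS) for _ in range(3)])
    # Same label three times: the seed is fixed.
    print([sample(TOY_LOGITS, 1.0, seed=7) for _ in range(3)])
    # Different seeds can give different labels.
    print({sample(TOY_LOGITS, 1.0, seed=s) for s in range(50)})
```

Whether that kind of repeatability matters for ad review is a separate question from whether the classifier is accurate, which is the point made a few comments up.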

It’s all so incredibly gross. Using “AI” to undress someone you know is extremely fucked up. Please don’t do that.

I’m going to undress Nobody. And give them sexy tentacles.

Behold my meaty, majestic tentacles. This better not awaken anything in me…

Same vein as “you should not mentally undress the girl you fancy”. It’s just a support for that. Not that I have used it.

Don’t just upload someone else’s image without consent, though. That’s even illegal in most of Europe.

Why should you not mentally undress the girl you fancy (or not, what difference does it make)? Where is the harm of it?

> Where is the harm of it

there is none, that’s their point

Would it be any different if you learn how to sketch or photoshop and do it yourself?

You say that as if photoshopping someone naked isn’t fucking creepy as well.

Creepy, maybe, but tons of people have done it. As long as they don’t share it, no harm is done.

Yes, because the AI (if not local) will probably store the images on their servers.

good point

This is the only good answer.

This is also fucking creepy. Don’t do this.

I am not saying anyone should do it and don’t need some internet stranger to police me thankyouverymuch.

Can you articulate why, if it is for private consumption?

Consent.

You might be fine with having erotic materials made of your likeness, and maybe even of your partners, parents, and children. But shouldn’t they have the right not to be objectified as wank material?

I partly agree with you though, it’s interesting that making an image is so much more troubling than having a fantasy of them. My thinking is that it is external, real, and thus more permanent even if it wouldn’t be saved, lost, hacked, sold, used for defamation and/or just shared.

To add to this:

Imagine someone would sneak into your home and steal your shoes, socks and underwear just to get off on that or give it to someone who does.

Wouldn’t that feel wrong? Wouldn’t you feel violated? It’s the same with such AI porn tools. You serve to satisfy the sexual desires of someone else and you are given no choice. Whether you want it or not, you are becoming part of their act. Becoming an unwilling participant in such a way can feel similarly violating.

They are painting and using a picture of you, which is not as you would like to represent yourself. You don’t have control over this and thus, feel violated.

This reminds me of that fetish, where one person is basically acting like a submissive pet and gets treated like one by their “master”. They get aroused by doing that in public, one walking with the other on a leash like a dog on hands and knees. People around them become passive participants of that spectacle. And those often feel violated. Becoming an unwilling, unasked participant, either active or passive, in someone else’s sexual act, with little or no control over it, feels wrong and violating for a lot of people.

In principle that even shares some similarities to rape. There are countries where you can’t just take pictures of someone without asking them beforehand. Also there are certain rules on how such a picture can be used. Those countries acknowledge and protect the individual’s right to their image.

Just to play devil’s advocate here, in both of these scenarios:

> Imagine someone would sneak into your home and steal your shoes, socks and underwear just to get off on that or give it to someone who does.

> This reminds me of that fetish, where one person is basically acting like a submissive pet and gets treated like one by their “master”. They get aroused by doing that in public, one walking with the other on a leash like a dog on hands and knees. People around them become passive participants of that spectacle. And those often feel violated.

The person has the knowledge that this is going on. In the situation with AI nudes, the actual person may never find out.

Again, not to defend this at all, I think it’s creepy af. But I don’t think your arguments were particularly strong in supporting the AI nudes issue.

Traumatizing rape victims with nonconsensual imagery of them naked and doing sexual things with others, and sharing it, is totally not going to fuck up society even more and lead to a bunch of suicides! /s

Ai is the future. The future is dark.

tbf, the past and present are pretty dark as well

> it is external, real, and thus more permanent

Though just like your thoughts, the AI is imagining the nude parts as well because it doesn’t actually know what they look like. So it’s not actually a nude picture of the person. It’s that person’s face on an entirely fictional body.

But the issue is not with the AI tool, it’s with the human wielding it for their own purposes which we find questionable.

An ex-friend of mine Photoshopped nudes of another friend. For private consumption. But then someone found that folder. And suddenly someone has to live with the thought that these nudes, created without their consent, were used as spank bank material. It’s pretty gross and it ended the friendship between the two.

You can still be wank material with just your Facebook pictures.

Nobody can stop anybody from wanking on your images, AI or not.

That’s already weird enough, but there is a meaningful difference between nude pictures and clothed pictures. If you wanna whack one to my fb pics of me looking at a horse, ok, weird. Don’t fucking create actual nude pictures of me.

Louis CK has also sexually harassed a number of women (jerking off in front of unwilling viewers)…

If you have to ask, you’re already pretty skeevy.

And if you have to say that, you’re already sounding like some judgy jerk.

The fact that you do not even ask such questions shows that you are narrow-minded. Such a mentality leads to people thinking that “homosexuality is bad”, never even trying to ask why, and never having a chance of changing their mind.

Mhmm. Cool.

They cannot articulate why. Some people just get shocked at “shocking” stuff… maybe some societal reaction.

I do not see any issue in using this for personal consumption. Yes, I am a woman. And yes people can have my fucking AI generated nudes as long as they never publish it online and never tell me about it.

The problem with these apps is that they enable people to make these at large and leave them to publish them freely wherever. This is where the danger lies. Not in people jerking off to a picture of my fucking cunt alone in a bedroom.

It’s creepy and can lead to obsession, which can lead to actual harm for the individual.

I don’t think it should be illegal, but it is creepy and you shouldn’t do it. Also, sharing those AI images/videos could be illegal, depending on how they’re represented (e.g. it could constitute libel or fraud).

I disagree. I think it should be illegal. (And stay that way in countries where it’s already illegal.) For several reasons. For example, you should have control over what happens with your images. Also, it feels violating to become, unwillingly and unasked, part of someone else’s sexual act.

That sounds problematic though. If someone takes a picture and you’re in it, how do they get your consent to distribute that picture? Or are they obligated to cut out everyone but those who consent? What does that mean for news orgs?

That seems unnecessarily restrictive on the individual.

At least in the US (and probably lots of other places), any pictures taken where there isn’t a reasonable expectation of privacy (e.g. in public) are subject to fair use. This generally means I can use it for personal use pretty much unrestricted, and I can use it publicly in a limited capacity (e.g. with proper attribution and not misrepresented).

Yes, it’s creepy and you’re justified in feeling violated if you find out about it, but that doesn’t mean it should be illegal unless you’re actually harmed. And that barrier is pretty high to protect people’s rights to fair use. Without fair use, life would suck a lot more than someone doing creepy things in their own home with pictures of you.

So yeah, don’t do creepy things with other pictures of other people, that’s just common courtesy. But I don’t think it should be illegal, because the implications of the laws needed to get there are worse than the creepy behavior of a small minority of people.

Can you provide an example of when a photo has been taken that breaches the expectation of privacy that has been published under fair use? The only reason I could think that would work is if it’s in the public interest, which would never really apply to AI/deepfake nudes of unsuspecting victims.

I’m not really sure how to answer that. Fair use is a legal term that limits the “expectation of privacy” (among other things), so by definition, if a court finds it to be fair use, it has also found that it’s not a breach of the reasonable expectation of privacy legal standard. At least that’s my understanding of the law.

So my best effort here is tabloids. They don’t serve the public interest (they serve the interested public), and they violate what I consider a reasonable expectation of privacy standard, with my subjective interpretation of fair use. But I disagree with the courts quite a bit, so I’m not a reliable standard to go by, apparently.

Fair use laws relate to intellectual property, privacy laws relate to an expectation of privacy.

I’m asking when has fair use successfully defended a breach of privacy.

Tabloids sometimes do breach privacy laws, and they get fined for it.

Would you like it if someone were to make and wank to these pictures of your kids, wife or parents? The fact that you have to ask speaks much about you tho.

The fact that people don’t realize how these things can be used for bad and weaponized is insane. I mean, it shows they clearly are not part of the vulnerable group of people and their privilege of never having dealt with it.

The future is amazing! Everyone with apps going to the parks and making some kids nude. Or bullying which totally doesn’t happen in fucked up ways with all the power of the internet already.

There are plenty of things I might not like that aren’t illegal.

I’m interested in the thought experiment this has brought up, but I don’t want us to get caught in a reactionary fervor because of AI.

AI will make this easier to do, but people have been clipping magazines, and celebrities have had photoshopped fakes created of them, for as long as both mediums have existed. This isn’t new, but it is being commoditized.

My take is that these pictures shouldn’t be illegal to own or create, but they should be illegal to profit off of and distribute, meaning these tools specifically designed and marketed for it would be banned. If someone wants to tinker at home with their computer, you’ll never be able to ban that, and you’ll never be able to ban sexual fantasy.

I think it should be illegal, even photoshops of celebs. They too are human and have emotions.

Interesting how we can “undress any girl” but I have not seen a tool to “undress any boy” yet. 😐

I don’t know what it says about people developing those tools. (I know, in fact)

Make one :P

Then I suspect you’ll find the answer is money. The ones for women simply just make more money.

This

I’ve seen a tool like that. Everyone was a bodybuilder and hung like a horse.

I’m going to guess all the ones of women have bolt on tiddies and no pubic hair.

Well of course. Sagging breasts are gross /s

That’s what we all look like.

Don’t check though

Gotta wonder where they get their horse dick training images from

notices ur instance

Can’t judge though, I have a Chance myself lawl

Be the change you wish to see in the world

/s

You probably don’t need them. You can get these photos without even trying. Is a bit of a problem really.

You probably can with the same inpainting stable diffusion tools, it’s just not as heavily advertised.

Probably because the demand is not big or visible enough to make the development worth it, yet.

You don’t need to make fake nudes of guys - you can just ask. Hell, they’ll probably send you one even without asking.

In general women aren’t interested in that anyway and gay guys don’t need it because, again, you can just ask.

It remains fascinating to me how these apps are being responded to in society. I’d assume part of the point of seeing someone naked is to know what their bits look like, while these just extrapolate with averages (and likely, averages of glamor models). So we still don’t know what these people actually look like naked.

And yet, people are still scorned and offended as if they were.

Technology is breaking our society, albeit in places where our culture was vulnerable to being broken.

> And yet, people are still scorned and offended as if they were.

I think you are missing the plot here… If a naked pic of yourself, your mother, your wife, your daughter is circulating around the campus, work or just online… Are you really going to be like “lol my nipples are lighter and they don’t know” ??

You may not get that job, promotion, entry into a program, etc. The harm done by naked pics in public would be just as real whether the representation is accurate or not … And that’s not even starting to talk about the violation of privacy and overall creepiness of whatever people will do with that pic of your daughter out there.

I believe their point is that an employer logically shouldn’t care if some third party fabricates an image resembling you. We still have an issue with latent puritanism, and this needs to be addressed as we face the reality of more and more convincing fakes of images, audio, and video.

I agree… however, we live in the world we live in, where employers do discriminate as much as they can before getting in trouble with the law

I think the only thing we can do is to help out by calling this out. AI fakes are just advanced gossip, and people need to realize that.

This is a transitional period issue. In a couple of years you can just say AI made it even if it’s a real picture and everyone will believe you. Fake nudes are in no way a new thing anyway. I used to make dozens of these by request back in my edgy 4chan times 15 years ago.

Dead internet.

It also means in a few years, any terrible thing someone does will just be excused as a “deep fake” if you have the resources, and any terrible thing someone wants to pin on you will be cooked up in seconds. People won’t just blanket believe or disbelieve any possible deep fake. They’ll cherry pick what to believe based on their preexisting world view and how confident the storytelling comes across.

As far as your old edits go, if they’re anything like the ones I saw, they were terrible and not believable at all.

I’m still on the google prompt bandwagon of typing this query:

`stuff i am searching for before:2023`… or ideally, even before COVID-19, if you want more valuable, less tainted results. It’s only going to get worse from here, 2024 is the year of saturation with garbage data on the web (yes I know it was already bad before, but now AI is pumping this shit out at an industrial scale.)

> This is a transitional period issue. In a couple of years you can just say AI made it even if it’s a real picture and everyone will believe you.

Sure, but the question of whether they harm the victim is still real… if your prospective employer finds tons of pics of you with Nazi flags, guns and drugs… they may just “play it safe” and pass on you… no matter how much you claim (or even the employer might think) they are fakes.

On the other end, I welcome the widespread creation of these of EVERYONE, so that it becomes impossible for them to be believable. No one should be refused from a job/promotion because of the existence of a real one IMO and this will give plausible deniability.

People are refused for jobs/promotions on the most arbitrary basis, often against existing laws but they are impossible to enforce.

Even if it is normalized, there is always the escalation factor… sure, nobody won’t hire Anita because of her nudes out there, everyone has them and they are probably fake right?.. but Perdita? hmmm I don’t want my law firm associated in any way with her pics of tentacle porn, that’s just too much!

Making sure we are all in the gutter is not really a good way to deal with this issue… especially since it will, once again, impact women 100x more than it will affect men.

I suspect it’s more affecting for younger people who don’t really think about the fact that in reality, no one has seen them naked. Probably traumatizing for them and logic doesn’t really apply in this situation.

Does it really matter though? “Well you see, they didn’t actually see you naked, it was just a photorealistic approximation of what you would look like naked”.

At that point I feel like the lines get very blurry, it’s still going to be embarrassing as hell, and them not being “real” nudes is not a big comfort when having to confront the fact that there are people masturbating to your “fake” nudes without your consent.

I think in a few years this won’t really be a problem because by then these things will be so widespread that no one will care, but right now the people being specifically targeted by this must not be feeling great.

It depends where you are in the world. In the Middle East, even a deepfake of you naked could get you killed if your family is insane.

It depends very much on the individual apparently. I don’t have a huge data set but there are girls that I know that have had this happen to them, and some of them have just laughed it off and really not seemed like they cared. But again they were in their mid twenties, not 18 or 19.

Something like this could be career ending for me. Because of the way people react. “Oh did you see Mrs. Bee on the internet?” Would have to change my name and move three towns over or something. That’s not even considering the emotional damage of having people download you. Knowledge that “you” are dehumanized in this way. It almost takes the concept of consent and throws it completely out the window. We all know people have lewd thoughts from time to time, but I think having a metric on that…it would be so twisted for the self-image of the victim. A marketplace for intrusive thoughts where anyone can be commodified. Not even celebrities, just average individuals trying to mind their own business.

Exactly. I’m not even shy, my boobs have been out plenty and I’ve sent nudes and all that. Hell I met my wife with my tits out. But there’s a wild difference between pictures I made and released of my own will in certain contexts and situations vs pictures attempting to approximate my naked body generated without my knowledge or permission because someone had a whim.

I think you might be overreacting, and if you’re not, then it says much more about the society we are currently living in than this particular problem.

I’m not promoting AI fakes, just to be clear. That said, AI is just making fakes easier. If you were a teacher (for example) and you’re so concerned that a student of yours could create this image that would cause you to pick up and move your life, I’m sad to say they can already do this and they’ve been able to for the last 10 years.

I’m not saying it’s good that a fake, or an unsubstantiated rumor of an affair, etc can have such big impacts on our life, but it is troubling that someone like yourself can fear for their livelihood over something so easy for anyone to produce. Are we so fragile? Should we not worry more about why our society is so prudish and ostracizing to basic human sexuality?

Wtf are you even talking about? People should have the right to control if they are “approximated” as nude. You can wax poetic how it’s not necessarily correct but that’s because you are ignoring the woman who did not consent to the process. Like, if I posted a nude then that’s on the internet forever. But now, any picture at all can be made nude and posted to the internet forever. You’re entirely removing consent from the equation you ass.

Totally get your frustration, but people have been imagining, drawing, and photoshopping people naked since forever. To me the problem is if they try and pass it off as real. If someone can draw photorealistic pieces and drew someone naked, we wouldn’t have the same reaction, right?

It takes years of practice to draw photorealism, and days if not weeks to draw a particular piece. Which is absolutely not the same as any jackass with a net connection and 5 minutes creating an equally or more realistic version.

It’s really upsetting that this argument keeps getting brought up. Because while guys are being philosophical about how it’s theoretically the same thing, women are experiencing real world harm and harassment from these services. Women get fired for having nudes, girls are being blackmailed and bullied with this shit.

But since it’s theoretically always been possible, somehow churning through any woman you find on Instagram isn’t an issue.

> Totally get your frustration

Do you? Since you aren’t threatened by this, yet another way for women to be harassed is just a fun little thought experiment.

Well that’s exactly the point from my perspective. It’s really shitty here in the stage of technology where people are falling victim to this. So I really understand people’s knee jerk reaction to throw on the brakes. But then we’ll stay here where women are being harassed and bullied with this kind of technology. The only paths forward, theoretically, are to remove it altogether or to make it ubiquitous background noise. Removing it altogether, in my opinion, is practically impossible.

So my point is that a picture from an unverified source can never be taken as truth. But we’re in a weird place technologically, where unfortunately it is. I think we’re finally reaching a point where we can break free of that. If someone sends me a nude with my face on it like, “Is this you?!!”. I’ll send them one back with their face like, “Is tHiS YoU?!??!”.

We’ll be in a place where we as a society cannot function taking everything we see on the internet as truth. Not only does this potentially solve the AI nude problem, it can solve the actual nude leaks / revenge porn, other forms of cyberbullying, and mass distribution of misinformation as a whole. The internet hasn’t been a reliable source of information since its inception. The problem is, up until now, it’s been just plausible enough that the gullible fall into believing it.

How dare that other person I don’t know and will never meet gain sexual stimulation!

My body is not inherently for your sexual stimulation. Downloading my picture does not give you the right to turn it into porn.

Did you miss what this post is about? In this scenario it’s literally not your body.

There is nothing stopping anyone from using it on my body. Seriously, get a fucking grip.

You get to tell me what I can and cannot think about in my own head?

I think half the people who are offended don’t get this.

The other half think that it’s enough to cause hate.

Both arguments rely on enough people being stupid.

So many of these comments are breaking down into arguments of basic consent for pics, and knowing how so many people are, I sure wonder how many of those same people post pics of their kids on social media constantly and don’t see the inconsistency.

There aren’t really many good reasons to post your kid’s picture anyway.

Yet another example of multi billion dollar companies that don’t curate their content because it’s too hard and expensive. Well too bad maybe you only profit 46 billion instead of 55 billion. Boo hoo.

It’s not that it’s too expensive, it’s that they don’t care. They won’t do the right thing until and unless they are forced to, or it affects their bottom line.

An economic entity cannot care, I don’t understand how people expect them to. They are not human.

Economic entities aren’t robots, they’re collections of people engaged in the act of production, marketing, and distribution. If this ad/product exists, it’s because people made it exist deliberately.

No they are slaves to the entity.

They can be replaced

Everyone from top to bottom can be replaced

And will be unless they obey the machine’s will

It’s crazy talk to deny this fact because it feels wrong

It’s just the truth and yeah, it’s wrong

> Everyone from top to bottom can be replaced

Once you enter the actual business sector and find out how much information is siloed or sequestered in the hands of a few power users, I think you’re going to be disappointed to discover this has never been true.

More than one business has failed because a key member of the team left, got an ill-conceived promotion, or died.

Your example is 9 billion difference. This would not cost 9 billion. It wouldn’t even cost 1 billion.

> Well too bad maybe you only profit 46 billion instead of 55 billion.

I can’t possibly imagine this quality of clickbait is bringing in $9B annually.

Maybe I’m wrong. But this feels like the sort of thing a business does when it’s trying to juice the same lemon for the fourth or fifth time.

AI gives creative license to anyone who can communicate their desires well enough. Every great advancement in the media age has been pushed in one way or another with porn, so why would this be different?

I think if a person wants visual “material,” so be it. They’re doing it with their imagination anyway.

Now, generating fake media of someone for profit or malice, that should get punishment. There’s going to be a lot of news cycles with some creative perversion and horrible outcomes intertwined.

I’m just hoping I can communicate the danger of some of the social media platforms to my children well enough. That’s where the most damage is done with this kind of stuff.

The porn industry is, in fact, extremely hostile to AI image generation. How can anyone make money off porn if users simply create their own?

Also I wouldn’t be surprised if it’s false advertising and clicking the ad will in fact just take you to a webpage with more ads, and a link from there to more ads, and more ads, and so on until eventually users give up (and hopefully click on an ad).

Whatever’s going on, the ad is clearly a violation of Instagram’s advertising terms.

> I’m just hoping I can communicate the danger of some of the social media platforms to my children well enough. That’s where the most damage is done with this kind of stuff.

It’s not just your children you need to communicate it to. It’s all the other children they interact with. For example I know a young girl (not even a teenager yet) who is being bullied on social media lately - the fact she doesn’t use social media herself doesn’t stop other people from saying nasty things about her in public (and who knows, maybe they’re even sharing AI generated CSAM based on photos they’ve taken of her at school).

I think old people are the ones less likely to understand this stuff.

How old? My parents certainly understand this, my grandparents not so much, and my son not yet (5yo).

> How can anyone make money off porn if users simply create their own?

What, you mean like amateur porn or…?

Seems like professional porn still does great after over two decades of free internet porn so…

I guess they will solve this one the same way, by having better production quality. 🤷

Good, let all celebs come together and sue zuck into the ground

It’s funny how many people leapt to the defense of Title V of the Telecommunications Act of 1996 Section 230 liability protection, as this helps shield social media firms from assuming liability for shit like this.

Sort of the Heads-I-Win / Tails-You-Lose nature of modern business-friendly legislation and courts.

YouTube has been doing this for like 6 or 7 months, even with famous people in the ads. I remember one for a while with Ortega.

That bidding model for ads should be illegal. Alternatively, companies displaying them should be responsible/be able to tell where it came from. Misinformation has become a real problem, especially in politics.

This reminded me of those kids who made pornographic videos of their classmates.

“Major Social Media Company Profits From App That Creates Unauthorized Nudes! Pay Us So You Can Read About It!”

What a shitshow.

404 Media is worker owned; you should pay them.

Is there such a thing as a consensual undressing app? Seems redundant

I assume that’s what you’d call OnlyFans.

That said, the irony of these apps is that it’s not the nudity that’s the problem, strictly speaking. It’s taking someone’s likeness and plastering it on a digital mannequin. What social media has done has become the online equivalent of going through a girl’s trash to find an old comb, pulling the hair off, and putting it on a Barbie doll that you then use to jerk/jill off.

What was the domain of 1980s perverts from comedies about awkward high schoolers has now become a commodity we’re supposed to treat as normal.

Idk how many people are viewing this as normal, I think most of us recognize all of this as being incredibly weird and creepy.

Idk how many people are viewing this as normal

Maybe not “Lemmy” us. But the folks who went hog wild during The Fappening, combined with younger people who are coming into contact with pornography for the first time, make a ripe base of users who will consider this the new normal.

Am I the only one who doesn’t care about this?

Photoshop has existed for some time now, so creating fake nudes just became easier.

Also why would you care if someone jerks off to a photo you uploaded, regardless of potential nude edits. They can also just imagine you naked.

If you don’t want people to jerk off to your photos, don’t upload any. It happens with and without these apps.

But Instagram selling apps for it is kinda fucked, since it’s very anti-porn, but then sells apps for it (to children).

It’s about consent. If you have no problem with people jerking off to your pictures, fine, but others do.

If you don’t want people to jerk off to your photos, don’t upload any. It happens with and without these apps.

You get that that opinion is pretty much the same as those who say if she didn’t want to be harassed she shouldn’t have worn such provocative clothing!?

How about we allow people to upload whatever pictures they want and try to address the weirdos turning them into porn without consent, rather than blaming the victims?

Nah it’s more like: If she didn’t want people to jerk off thinking about her, she shouldn’t have worn such provocative clothing.

I honestly don’t think we should encourage uploading this many photos as private people, but that’s something else.

You don’t need consent to jerk off to someone’s photos. You do need consent to tell them about it. Creating images is a bit riskier, but if you make sure no one ever sees them, there is no actual harm done.

I think it’s clear you have never experienced being sexualized when you weren’t okay with it. It’s a pretty upsetting experience that can feel pretty violating. And as most guys rarely if ever experience being sexualized, never mind when they don’t want to be, I’m not surprised people might be unable to empathize.

Having experienced being sexualized when I wasn’t comfortable with it, this kind of thing makes me kinda sick to be honest. People are used to having a reasonable expectation that posting safe for work pictures online isn’t inviting being sexualized. And that it would almost never be turned into pornographic material featuring their likeness, whether it was previously possible with Photoshop or not.

It’s not surprising people would find the loss of that reasonable assumption discomforting given how uncomfortable it is to be sexualized when you don’t want to be. How uncomfortable a thought it is that you can just be going about your life and minding your own business, and it will now be convenient and easy to produce realistic porn featuring your likeness, at will, with no need for uncommon skills not everyone has.

Interesting (wrong) assumption there buddy.

But why would I care how people think of me? If it influences their actions, we gonna start to have problems, tho.

Also why would you care if someone jerks off to a photo you uploaded, regardless of potential nude edits. They can also just imagine you naked.

Imagining and creating physical (even digital) material are different levels of how real and tangible it feels. Don’t you think?

There is an active act of carefully editing those pictures involved. It’s a misuse and against your intention when you posted such a picture of yourself. You are losing control by that and unwillingly become part of the sexual act of someone else.

Sure, those who feel violated by that might also not like it if people imagine things, but that’s still a less “real” level.

For example: Imagining murdering someone is one thing. Creating very explicit pictures about it and watching them regularly, or even printing them and hanging them on the walls of one’s room, is another.

I don’t want to equate murder fantasies with sexual ones. My point is to illustrate that it feels to me and obviously a lot of other people that there are significant differences between pure imagination and creating something tangible out of it.

Oh no, hanging the pictures on your wall is fucked.

The difference is if someone else can reasonably find out. If I tell someone that I think about them/someone else while masturbating, that is sexual harassment. If I have pictures on my wall and guests could see them, that’s sexual harassment.

If I just have an encrypted folder, not a problem.

It’s like the difference between thinking someone is ugly and saying it.

Plz don look at my digital pp, it make me sad…

Maybe others have different feeds, but the IG ad feed I know and love promotes counterfeit USD, MDMA, mushrooms, mail order brides, MLM schemes, gun building kits and all kinds of cool shit (all scams through Telegram)… so why would they care about an AI image generator that puts digital nipples and cocks on people? Does that mean I can put a cock on Hillary and bobs/vagenes on Trump? Asking for a friend.