I’ll admit I did use AI for code before, but here’s the thing. I had already coded for years, and I usually try everything else before last-resort things. And I find that approach works well. I rarely needed to go the AI route. I used it for like .11% of my coding work, and I verified it through stress testing.

Microsoft is doing this today. I can’t link it because I’m on mobile. It is in dotnet. It is not going well :)

Yeah, can’t find anything on dotnet getting poisoned by AI slop, so until you link it, I’ll assume you’re lying.

I guess they were referring to this.

OMG, this is gold! My neighbor must have wondered why I am laughing so hard…

The “reverse centaur” comment citing Cory Doctorow is so true it hurts - they want people to serve machines and not the other way around. That’s exactly how Amazon’s warehouses work, with workers being paced by factory floor robots.

AI is at its most useful in the early stages of a project. Imagine coming to the fucking ssh project with AI slop thinking it has anything of value to add 😂

The early stages of a project are exactly where you should really think hard and long about what exactly you do want to achieve, what qualities you want the software to have, what the detailed requirements are, how you test them, and how the UI should look. And from that, you derive the architecture.

AI is fucking useless at all of that.

In all complex planned activities, laying the right groundwork and foundations is essential for success. Software engineering is no different. You won’t order a bricklayer apprentice to draw the plan for a new house.

And if your difficulty is in lacking detailed knowledge of a programming language, it might be - depending on the case! - the best approach to write a first prototype in a language you know well, so that your head is free to think about the concerns listed in paragraph 1.

the best approach to write a first prototype in a language you know well

Ok, writing a web browser in POSIX shell using yad now.

I’m going back to TurboBASIC.

writing a web browser in POSIX shell

Not HTML but the much simpler Gemini protocol - well, you could have a look at Bollux, a Gemini client written in shell, or at ereandel:

https://github.com/kr1sp1n/awesome-gemini?tab=readme-ov-file#terminal

AI is only good for the stage when…

AI is only good in case you want to…

Can’t think of anything. Edit: yes, I really tried

Playing the Devil’s advocate was easier than being AI’s advocate.

I might have said it to be good in case you are pitching a project and want to show some UI stuff maybe, without having to code anything.

But you know, there are actually specialised tools for that, which UI/UX designers used to show me what I needed to implement.

And when I am pitching UI, I just use a pencil and paper and it is so much more efficient than anything AI, because I don’t need to talk to something, to make a mockup, to be used to talk to someone else. I can just draw it in front of the other guy with 0 preparation, right as it comes into my mind, and I don’t need to pay for any data center usage. And if I need to go paperless, there are whiteboards/blackboards/greenboards and Inkscape.

After having banged my head trying to explain code to a new developer, so that they can hopefully start making meaningful contributions, I don’t want to be banging my head on something worse than a new developer, hoping that it will output something that is logically sound.

AI is good for the early stages of a project … when it’s important to create the illusion of rapid progress so that management doesn’t cancel the project while there’s still time to do so.

Ahh, so an outsourced con~~man~~computer.

It’s good as a glorified autocomplete.

Except that an autocomplete with simple, lightweight and appropriate heuristics can actually make your work much easier and will not make you read its output again and again before you can be confident about it.

True, and it doesn’t boil the oceans and poison people’s air.

Can Open Source defend against copyright claims for AI contributions?

If I submit code to ReactOS that was trained on leaked Microsoft Windows code, what are the legal implications?

what are the legal implications?

It would be so fucking nice if we could use AI to bypass copyright claims.

“No officer, I did not write this code. I trained AI on copyrighted material and it wrote the code. So I’m innocent.”

If I submit code to ReactOS that was trained on leaked Microsoft Windows code, what are the legal implications?

None. There is a good chance that leaked MS code found its way into training data, anyway.

If AI was good at coding, my game would be done by now.

FTA: The user considered it was the unpaid volunteer coders’ “job” to take his AI submissions seriously. He even filed a code of conduct complaint with the project against the developers. This was not upheld. So he proclaimed the project corrupt. [GitHub; Seylaw, archive]

This is an actual comment that this user left on another project: [GitLab]

As a non-programmer, I have zero understanding of the code and the analysis and fully rely on AI and even reviewed that AI analysis with a different AI to get the best possible solution (which was not good enough in this case).

I am not a programmer and I think it’s silly to think that AI will replace developers.

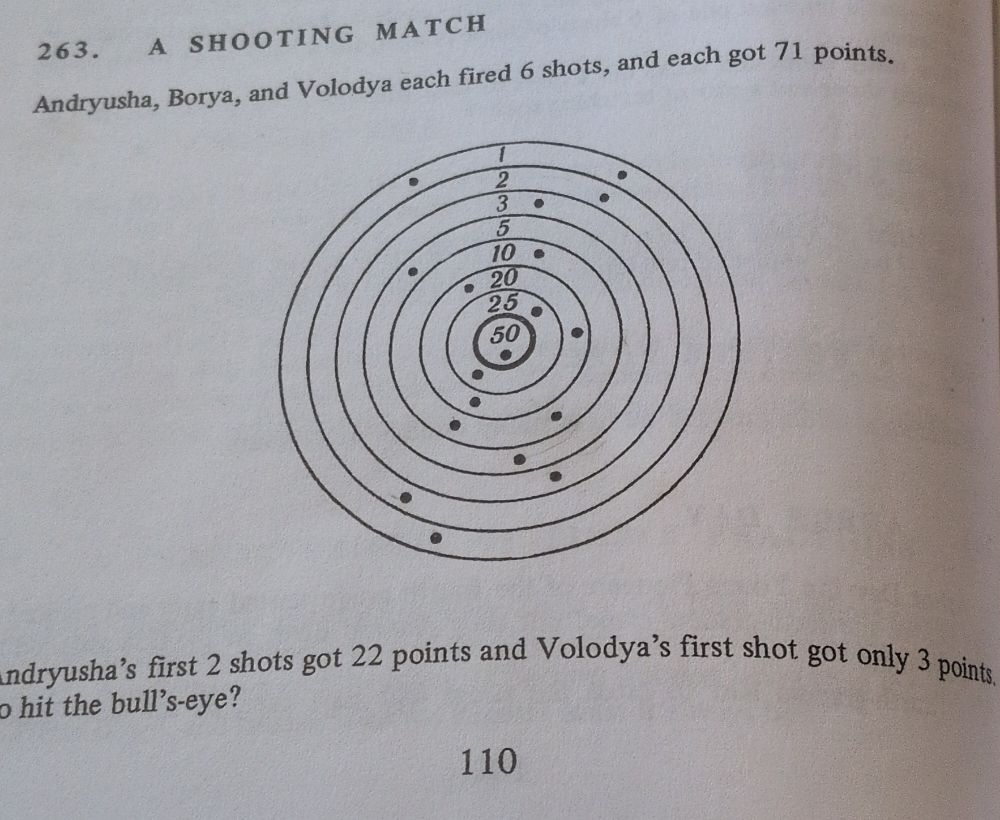

But I was working through a math problem in Moscow Puzzles with my kiddo.

We had solved it, but I wasn’t sure he got it at a deep level. So I figured I’d do something in Excel or maybe just do cutouts. But I figured I’d try to find a web app that would do this better. Nothing really came up that was a good match. But then I thought, let’s see how bad AI programming can be. I’d fought with it over some Excel functions and it’s been mainly useful in pointing me in the right direction, but only occasionally getting me over the finish line.

After about 6 to 8 hours of work, a little debugging, having it teach and quiz me occasionally, and some real frustration from pointing out that a feature that was previously changed had re-emerged, I eventually had something that worked.

The Shooting Range Simulator is a web-based application designed to help users solve a logic puzzle involving scoring points by placing blocks on vertical number lines.

A developer buddy of mine said: “I took a quick scroll through the code. Looks pretty clean, but I didn’t dive in enough to really understand it. Definitely all that CSS BS would take me ages to do without AI.”

I don’t take credit for this and don’t pretend that this was my work, but I know my kiddo is excited to try the tool. I hope he learns from it and we bond over a math problem.

I know that everyone is worried about this tool, but moments like those are not nothing. Personally, I’m a Luddite and think the new tools should be deployed by the people whose livelihood they will affect and not the business owners.

Personally, I’m a Luddite and think the new tools should be deployed by the people whose livelihood they will affect and not the business owners.

Thank you for correctly describing what a Luddite wants and does not want.

Yes, despite the irrational phobia amongst the Lemmings, AI is massively useful across a wide range of examples like you’ve just given as it reduces barriers to building something.

As a CS grad, the problem isn’t it replacing all programmers, at least not immediately. It’s that a senior software engineer can manage a bunch of AI agents, meaning there’s less demand for developers overall.

Same way tools like Wix, Facebook, etc came in and killed the need for a bunch of web developers that operated in the range for small businesses.

As a CS grad, the problem isn’t it replacing all programmers, at least not immediately. It’s that a senior software engineer can manage a bunch of AI agents, meaning there’s less demand for developers overall.

Yes! You get it. That right there proves that you’ll make it through just fine. So many in this thread are denying that AI is gonna take jobs. But you gave a great scenario.

Microsoft has set up Copilot to make contributions to the dotnet runtime (https://github.com/dotnet/runtime/pull/115762). I’m sure the maintainers spend more time reviewing and interacting with Copilot than it would have taken them to write the code themselves.

My theory is not a lot of people like this AI crap. They just lean into it for the fear of being left behind. Now you all think it’s just gonna fail and it’s gonna go bankrupt. But a lot of ideas in America are subsidized. And they don’t work well, but they still go forward. It’ll be you, the taxpayer, that will be funding these stupid ideas that don’t work, that are hostile to our very well-being.

AI is just the lack of privacy, an authoritarian dragnet, remote control over others’ computers, web scraping, the complete destruction of America’s art scene, the stupidification of America and copyright infringement with a sprinkling of baby death.

Don’t forget subscriptions. We were freed by Linux, GCC, and all the open source tools that replaced $1000 proprietary crap. They now have that money again through AI monthly plans.

A lot of people on HackerNews have a $200 monthly subscription to have the privilege to work. It’s crazy.

Ask Daniel Stenberg.

It’s not good because it has no context on what is correct or not. It’s constantly making up functions that don’t exist or attributing functions to packages that don’t exist. It’s often sloppy in its responses because the source code it parrots is some amalgamation of good coding and terrible coding. If you are using this for your production projects, you will likely not be knowledgeable when it breaks, it’ll likely have security flaws, and will likely have errors in it.
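One cheap guard against made-up functions is to check that a suggested name actually exists before relying on it. A minimal sketch in Python, assuming the suggestion is for an importable module; the helper name `function_exists` and the made-up names `parse_fast` and `not_a_real_pkg` are hypothetical and only there for illustration:

```python
import importlib


def function_exists(module_name: str, attr_name: str) -> bool:
    """Return True only if the module imports and exposes a callable with that name."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # The package itself does not exist (or is not installed).
        return False
    return callable(getattr(module, attr_name, None))


# The first name is real; the last two are deliberately invented.
print(function_exists("json", "loads"))
print(function_exists("json", "parse_fast"))
print(function_exists("not_a_real_pkg", "run"))
```

This only catches names that do not exist at all. It says nothing about whether a real function is being called correctly, so it does not replace reading and testing the code.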

So you’re saying I’ve got a shot?

And I’ll keep saying this: you can’t teach a neural network to understand context without creating a generalised context engine, another word for which is AGI.

Fidelity is impossible to automate.

I created this entirely using mistral/codestral

https://github.com/suoko/gotosocial-webui

Not real software, but it was done by instructing the AI about the basics of the mother app and the fediverse protocol.

I think it’s established genAI can spit out straightforward toy examples of a few hundred lines. Bungalows aren’t simply big birdhouses though.

Still, they’re just birdhouses with some more infrastructure, and you can read instructions on how to build them.

Empty readme and no comments in the code. It’s useless to anyone who would want to change or fix it. It’s junior’s code and unacceptable in a professional environment.

Done in less than 4 hours while doing other things. It was just a sample. With no clue about golang.

As a dumb question from someone who doesn’t code, what if closed source organizations have different needs than open source projects?

Open source projects seem to hinge a lot more on incremental improvements and change only for the benefit of users. In contrast, closed source organizations seem to use code more to quickly develop a new product or change that justifies money. Maybe closed source organizations are more willing to accept slop code that is bad but can barely work versus open source which won’t?

Baldur Bjarnason (who hates AI slop) has posited precisely this:

My current theory is that the main difference between open source and closed source when it comes to the adoption of “AI” tools is that open source projects generally have to ship working code, whereas closed source only needs to ship code that runs.

That’s basically my question. If the standards of code are different, AI slop may be acceptable in one scenario but unacceptable in another.

Maybe closed source organizations are more willing to accept slop code that is bad but can barely work versus open source which won’t?

Because most software is internal to the organisation (therefore closed by definition) and never gets compared or used outside that organisation: Yes, I think that when that software barely works, it is taken as good enough and there’s no incentive to put more effort to improve it.

My past year (and more) of programming business-internal applications has been characterised by upper management imperatives to “use Generative AI, and we expect that to make you nerd faster” without any effort spent to figure out whether there is any net improvement in the result.

Certainly there’s no effort spent to determine whether it’s a net drain on our time and on the quality of the result. Which everyone on our teams can see is the case. But we are pressured to continue using it anyway.

I’d argue the two aren’t as different as you make them out to be. Both types of projects want a functional codebase, both have limited developer resources (communities need volunteers, businesses have a budget limit), and both can benefit greatly from the development process being sped up. Many development practices that are industry standard today started in the open source world (style guides and version control strategy to name two heavy hitters) and there’s been some bleed-through from the other direction as well (tool juggernauts like Atlassian having new open source alternatives made directly in response).

No project is immune to bad code. There’s even a lot of bad code out there that was believed to be good at the time: it mostly worked, in retrospect we learned how bad it was, but no one wanted to fix it.

The end goals and purposes are for sure different between community passion projects and corporate, financially driven projects. But the way you get there is more or less the same, and that’s the crux of the article’s argument: Historically open source and closed source have done the same thing, so why is this one tool usage so wildly different?

Historically open source and closed source have done the same thing, so why is this one tool usage so wildly different?

Because, as noted by another replier, open source wants working code and closed source just wants code that runs.

When did you last decide to buy a car that barely drives?

And another thing, there are some tech companies that operate very short-term, like typical social media start-ups of which about 95% go bust within two years. But a lot of computing is very long term with code bases that are developed over many years.

The world only needs so many shopping list apps - and there exist enough of them that writing one is not profitable.

And another thing, there are some tech companies that operate very short-term, like typical social media start-ups of which about 95% go bust within two years.

This is a very generous sentence you have made, haha. My observation is that the vast majority of tech companies seem to operate unprofitably (the programming division is pure cost, no measurable financial benefit) and with churning, bug-riddled code that never really works correctly.

Netflix was briefly hugely newsworthy in the technology circles because they… Regularly did disaster recovery tests.

Edit: Netflix made news headlines because someone decided that Kevin in IT having a bad day shouldn’t stop every customer from streaming. This made the news.

Our technology “leadership” are, on average, so incredibly bad at computer stuff.

Most software isn’t public-facing at all (neither open source nor closed source), it’s business-internal software (which runs a specific business and implements its business logic), so most of the people who are talking about coding with AI are also talking mainly about this kind of business-internal software.

Does business internal software need to be optimized?

Does business internal software need to be optimized?

Need to be optimised for what? (To optimise is always making trade-offs, reducing some property of the software in pursuit of some optimised ideal; what ideal are you referring to?)

And I’m not clear on how that question is related to the use of LLMs to generate code. Is there a connection you’re drawing between those?

So I was trying to make a statement that the developers of AI for coding may not have the high bar for quality and optimization that closed source developers would have, then was told that the major market was internal business code.

So, I asked, do companies need code that runs quickly on the systems that they are installed on to perform their function. For instance, can an unqualified programmer use AI code to build an internal corporate system rather than have to pay for a more qualified programmer’s time either as an internal hire or producing.

do companies need code that runs quickly on the systems that they are installed on to perform their function.

(Thank you, this indirectly answers one question: the specific optimisation you’re asking about, it seems, is optimised speed of execution when deployed in production. By stating that as the ideal to be optimised, necessarily other properties are secondary and can be worse than optimal.)

Some do pursue that ideal, yes. For example: many businesses seek to deploy their internal applications on hosted environments where they pay not for a machine instance, but for seconds of execution time. By doing this they pay only when the application happens to be running (on a third-party’s managed environment, who will charge them for the service). If they can optimise the run-time of their application for any particular task, they are paying less in hosting costs under such an agreement.
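To make that arithmetic concrete, here is a rough sketch in Python. Every number in it (run count, run times, price per second) is a made-up assumption for illustration, not any real provider’s pricing, and `monthly_cost` is a hypothetical helper:

```python
def monthly_cost(runs_per_month: int, seconds_per_run: float, price_per_second: float) -> float:
    """Hosting bill when the provider charges purely for seconds of execution time."""
    return runs_per_month * seconds_per_run * price_per_second


# All figures below are hypothetical.
RUNS_PER_MONTH = 100_000
PRICE_PER_SECOND = 0.00002  # assumed price in dollars per second of execution

before = monthly_cost(RUNS_PER_MONTH, 2.0, PRICE_PER_SECOND)  # task takes 2.0 s per run
after = monthly_cost(RUNS_PER_MONTH, 0.5, PRICE_PER_SECOND)   # same task optimised to 0.5 s

print(f"before: ${before:.2f}  after: ${after:.2f}  saved: ${before - after:.2f}")
```

Under this kind of billing the cost scales linearly with run time, so cutting the run time to a quarter cuts that part of the bill to a quarter. Whether the saving is worth the developer time spent on the optimisation is a separate question.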

can an unqualified programmer use AI code to build an internal corporate system rather than have to pay for a more qualified programmer’s time either as an internal hire or producing.

This is a question now about paying for the time spent by people to develop and maintain the application, I think? Which is thoroughly different from the time the application spends running a task. Again, I don’t see clearly how “optimise the application for execution speed” is related to this question.

I’m asking if it is worth spending more money on human developers to write code that isn’t slop.

Everyone here has been mentioning costs, but they haven’t been comparing them together to see if the cost of using human developers located in a high cost of living American city is worth the benefits.

There is commercial open source stuff too.

If humans are so good at coding, how come there are 8100000000 people and only 1500 are able to contribute to the Linux kernel?

I hypothesize that AI has average human coding skills.

The average coder is a junior, due to the explosive growth of the field (similar to how in some fast-growing nations the average age is very young). Thus what is average is far below what good code is.

On top of that, good code cannot be automatically identified by algorithms. Some very good codebases might look bad at a superficial level. For example, the code base of LMDB is very different from what common style guidelines suggest, but it is actually a masterpiece which is widely used. And vice versa, it is not difficult to make crappy code look pretty.

“Good code” is not well defined and your example shows this perfectly. LMDB’s codebase is absolutely horrendous when your quality criteria for good code are Readability and Maintainability. But it’s a perfect masterpiece if your quality criteria are Performance and Efficiency.

Most modern Software should be written with the first two in mind, but for a DBMS, the latter are way more important.

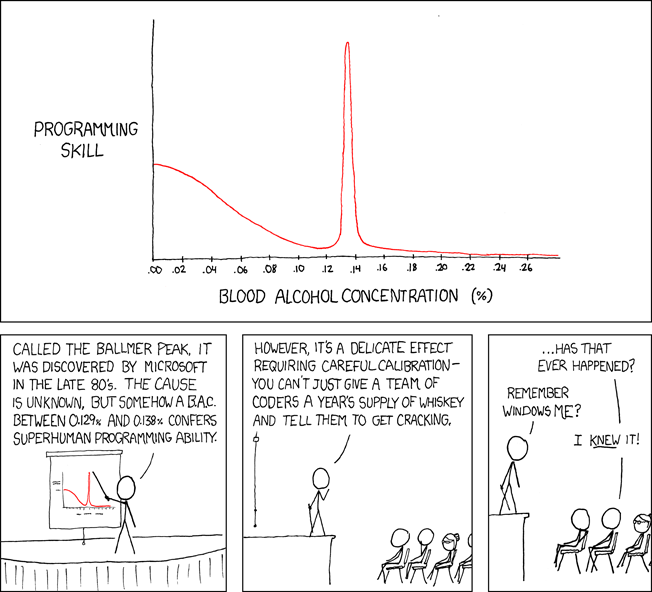

Average drunk human coding skills

A million drunk monkeys on typewriters can write a work of Shakespeare once in a while!

But who wants to pay a 50$ theater ticket in the front seat to see a play written by monkeys?

Well, according to Microsoft, mildly drunk coders work better.