I got an email ban.

1609 hours logged, 431 solved threads

Well, it is important to comply with the terms of service established by the website. It is highly recommended to familiarize oneself with the legally binding documents of the platform, including the Terms of Service (Section 2.1), User Agreement (Section 4.2), and Community Guidelines (Section 3.1), which explicitly outline the obligations and restrictions imposed upon users. By refraining from engaging in activities explicitly prohibited within these sections, you will be better positioned to maintain compliance with the platform’s rules and regulations and not receive email bans in the future.

Is this a joke?

This is an ironic ChatGPT answer, meant to (rightfully) creep you out.

NGL I read it and laughed at the AI-like response.

Then I felt sadness knowing AI is reading this and will regurgitate it back out.

Check the post history. Dude just seems like an ass.

Nope, it’s the “establishment is cool, Elon rocks” type.

Nah, but the user is. Their post history is… interesting.

ITT: People unable to recognize a joke

Jokes are supposed to be funny.

Shit like this makes me so glad that I just don’t sign up for these things if I don’t have to.

30 page TOS? You know what, I don’t need to make an account that bad.

If this is true, then we should prepare to be shouted at by ChatGPT for not already knowing about that simple error.

ChatGPT now just says “read the docs!” to every question.

Hey ChatGPT, how can I …

“Locking as this is a duplicate of [unrelated question]”

You joke.

This would have been probably early last year? Had to look up how to do something in fortran (because fortran) and the answer was very much in the voice of that one dude on the Intel forums who has been answering every single question for decades(?) at this point. Which means it also refused to do anything with features newer than 1992 and was worthless.

Tried again while chatting with an old work buddy a few months back and it looks like they updated to acknowledging f99 and f03 exist. So assume that was all stack overflow.

So they pulled a “reddit”?

These companies don’t realise their most engaged users generate a disproportionate amount of their content.

They will just go to their own spaces.

I think this is a good thing in the long run, the internet will become decentralised again.

I don’t know. It feels a bit like “When I quit my employer will realize how much they depended on me.” The realization tends to be on the other side.

But while SO may keep functioning fine it would be great if this caused other places to spring up as well. Reddit and X/Twitter are still there but I’m glad we have the fediverse.

Individuals leaving don’t have an immediate impact but entire groups of people?

People can see how that worked out for Boeing when many of their experienced engineers and quality inspectors left.

Well, reddit is doing fine so far. Shareholders are happy

Take all you want, it will only take a few hallucinations before no one trusts LLMs to write code or give advice

[…]will only take a few hallucinations before no one trusts LLMs to write code or give advice

Because none of us have ever blindly pasted some code we got off google and crossed our fingers ;-)

It’s way easier to figure that out than check ChatGPT hallucinations. There’s usually someone saying why a response in SO is wrong, either in another response or a comment. You can filter most of the garbage right at that point, without having to put it in your codebase and discover that the hard way. You get none of that information with ChatGPT. The data spat out is not equivalent.

That’s an important point, and it ties into the way ChatGPT and other LLMs take advantage of a flaw in the human brain:

Because it impersonates a human, people are more inherently willing to trust it. To think it’s “smart”. It’s dangerous how people who don’t know any better (and many people that do know better) will defer to it, consciously or unconsciously, as an authority and never second guess it.

And the fact it’s a one on one conversation, no comment sections, no one else looking at the responses to call them out as bullshit, the user just won’t second guess it.

Your thinking is extremely black and white. Many, many people, probably most actually, second-guess chatbot responses.

Think about how dumb the average person is.

Now, think about the fact that half of the population is dumber than that.

Split segment of data without pii to staging database, test pasted script, completely rewrite script over the next three hours.

We should already be at that point. We have already seen LLMs’ potential to inadvertently backdoor your code and to inadvertently help you violate copyright law (I guess we do need to wait to see what the courts rule, but I’ll be rooting for the open-source authors).

If you use LLMs in your professional work, you’re crazy. I would never be comfortable opening myself up to the legal and security liabilities of AI tools.

If you use LLMs in your professional work, you’re crazy

Eh, we use copilot at work and it can be pretty helpful. You should always check and understand any code you commit to any project, so if you just blindly paste flawed code (like with stack overflow,) that’s kind of on you for not understanding what you’re doing.

We already have those near constantly. And we still keep asking queries.

People assume that LLMs need to be ready to replace a principal engineer or a doctor or lawyer with decades of experience.

This is already at the point where we can replace an intern or one of the less good junior engineers. Because anyone who has done code review or has had to do rounds with medical interns knows… they are idiots who need people to check their work constantly. An LLM making up some functions because it saw them on Stack Overflow but never tested them is not at all different from a hotshot intern who copied some code from Stack Overflow and never tested it.

Except one costs a lot less…

This is already at the point where we can replace an intern or one of the less good junior engineers.

This is a bad thing.

Not just because it will put the people you’re talking about out of work in the short term, but because it will prevent the next generation of developers from getting that low-level experience. They’re not “idiots”, they’re inexperienced. They need to get experience. They won’t if they’re replaced by automation.

First a nearly unprecedented worldwide pandemic, followed almost immediately by record-breaking layoffs, then AI taking over the world. Man, it is really not a good time to start out as a newer developer. I feel so fortunate that I started working full-time as a developer nearly a decade ago.

Dude the pandemic was amazing for devs, tech companies hiring like mad, really easy to get your foot in the door. Now, between all the layoffs and AI it is hellish

People keep saying this but it’s just wrong.

Maybe I haven’t tried the language you have but it’s pretty damn good at code.

Granted, whatever it puts out needs to be tested and possibly edited but that’s the same thing we had to do with Stack Overflow answers.

I’ve tried a lot of scenarios and languages with various LLMs. The biggest takeaway I have is that AI can get you started on something or help you solve some issues. I’ve generally found that anything beyond a block or two of code becomes useless. The more it generates the more weirdness starts popping up, or it outright hallucinates.

For example, today I used an LLM to help me tighten up an incredibly verbose bit of code. Today was just not my day and I knew there was a cleaner way of doing it, but it just wasn’t coming to me. A quick “make this cleaner: <code>” and I was back to the rest of the code.

This is what LLMs are currently good for. They are just another tool like tab completion or code linting

Maybe for people who have no clue how to work with an LLM. They don’t have to be perfect to still be incredibly valuable, I make use of them all the time and hallucinations aren’t a problem if you use the right tools for the job in the right way.

The last time I saw someone talk about using the right LLM tool for the job, they were describing turning two minutes of writing a simple map/reduce into one minute of reading enough to confirm the generated one worked. I think I’ll pass on that.

At the end of the day, this is just yet another example of how capitalism is an extractive system. Unprotected resources are used not for the benefit of all but to increase and entrench the imbalance of assets. This is why they are so keen on DRM and copyright and why they destroy the environment and social cohesion. The thing is, people want to help each other; not for profit but because we have a natural and healthy imperative to do the most good.

There is a difference between giving someone a present and then them giving it to another person, and giving someone a present and then them selling it. One is kind and helpful and the other is disgusting and produces inequality.

If you’re gonna use something for free then make the product of it free too.

An idea for the fediverse and beyond: maybe we should be setting up instances with copyleft licences for all content posted to them. I actually don’t mind if you wanna use my comments to make an LLM. It could be useful. But give me (and all the other people who contributed to it) the LLM for free, like we gave it to you. And let us use it for our benefit, not just yours.

An idea for the fediverse and beyond: maybe we should be setting up instances with copyleft licences for all content posted to them. I actually don’t mind if you wanna use my comments to make an LLM. It could be useful. But give me (and all the other people who contributed to it) the LLM for free, like we gave it to you. And let us use it for our benefit, not just yours.

This seems like a very fair and reasonable way to deal with the issue.

Letting corporations “disrupt” forums was a mistake.

Eventually, we will need a fediverse version of StackOverflow, Quora, etc.

Those would be harvested to train LLMs even without asking first. 😐

I’d rather the harvesting be open to all than only the company hosting it.

deleted by creator

It’s not quite that simple, though. GDPR is only concerned with personally identifiable information. Answers and comments on SO rarely contain that kind of information as long as you delete the username on them, so it’s not technically against GDPR if you keep the contents.

You could argue that people can be identified by their writing style. I have no idea how far you’d get with that though.

Frankly I don’t see any way whatsoever that this would fly, and that’s a good thing!

Imagine what it would mean for software development if one angry dev could request the deletion of all their contributions at a moment’s notice by pointing to a right to be forgotten. Documentation is really not meaningfully different from that.

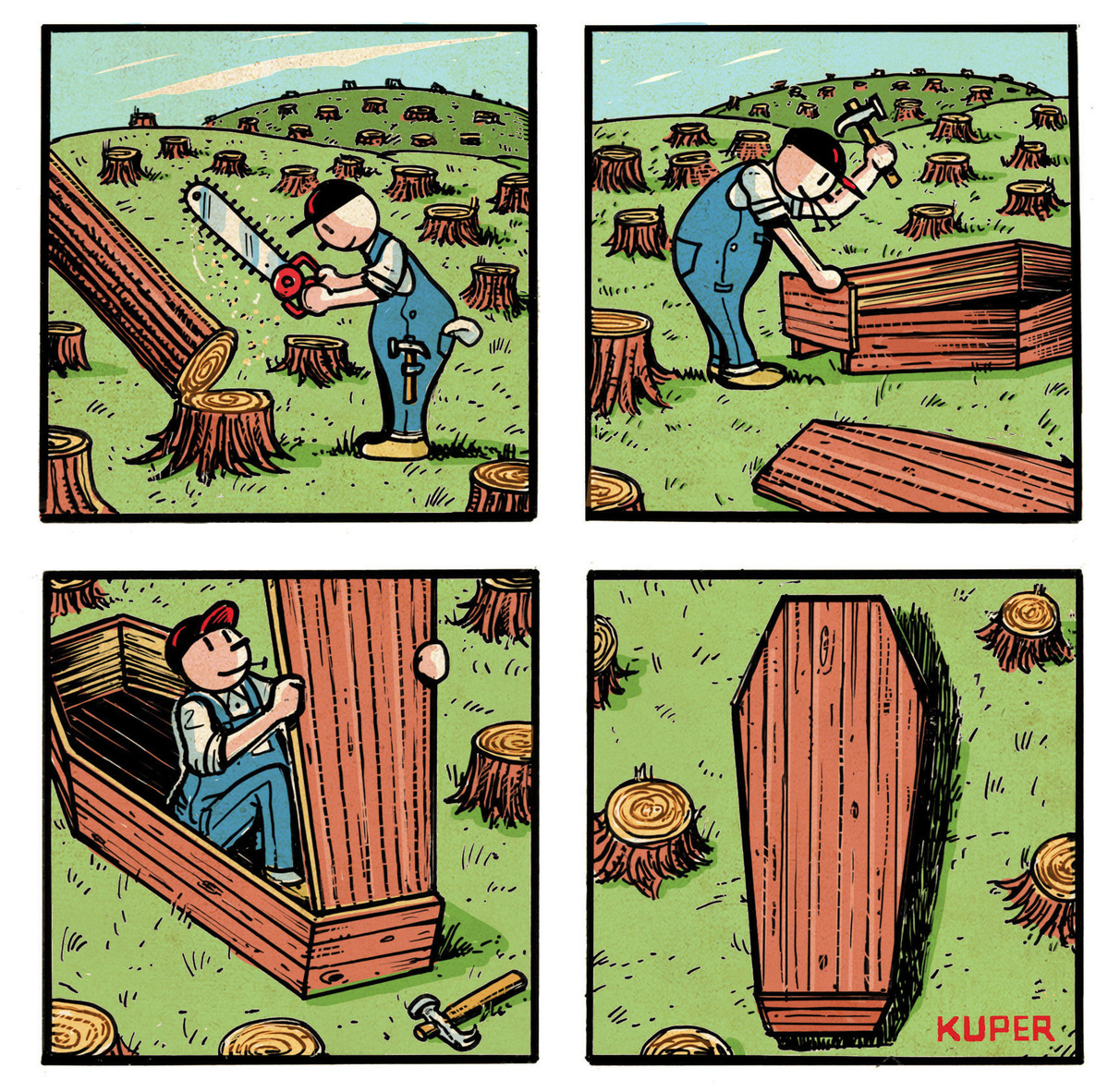

How many trees does a person need to make one coffin…

It’s a metaphor for us killing ourselves in the processes of deforestation, not a story of someone actually making a coffin.

It may not have been a wholly serious question.

You’re not a wholly serious person

You wound me.

Removed by mod

Oh I didn’t consider deleting my answers. Thanks for the good idea

Barbra StackOverflow. I’d be shocked if deleted comments weren’t retained by them.

I think the reason for those bans is that they don’t want you rebelling and are showing that they don’t need you personally, thus ban.

Of course it’s all retained.

They have been un-deleting after they ban.

Isn’t that illegal in most countries?

In Europe, GDPR gives you the right to have all your data deleted. All you do is send in a request and SO has to remove everything of yours, not just anonymize it. There are some exceptions for legal reasons, e.g. where financial transactions are involved, but comments should not be exempt.

See, this is why we can’t have nice things. Money fucks it up, every time. Fuck money, it’s a shitty backwards idea. We can do better than this.

Hear me out. Bottle caps.

Nah, I can’t imagine the Fallout that would cause

'Nuff said!

First, they sent the missionaries. They built communities, facilities for the common good, and spoke of collaboration and mutual prosperity. They got so many of us to buy into their belief system as a result.

Then, they sent the conquistadors. They took what we had built under their guidance, and claimed we “weren’t using it” and it was rightfully theirs to begin with.

Reddit/Stack/AI are the latest examples of an economic system where a few people monetize and get wealthy using the output of the very many.

Technofeudalism

It’s very precisely that.

Good to know that Stack Overflow will not be a trustworthy place to find solutions anymore.

You really don’t need anything near as complex as AI…a simple script could be configured to automatically close the issue as solved with a link to a randomly-selected unrelated issue.
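For what it's worth, here is roughly what that "simple script" could look like. This is only a tongue-in-cheek sketch: the function name `close_as_duplicate`, the integer issue IDs, and the message format are all made up for illustration and have nothing to do with how any real site handles closures.

```python
import random


def close_as_duplicate(issue_id, all_issue_ids, rng=random):
    """Return a closing message linking to a randomly chosen *other* issue.

    Hypothetical helper: the IDs and message wording are assumptions,
    not any real platform's API.
    """
    # Exclude the issue itself so the link is always to an unrelated one.
    candidates = [i for i in all_issue_ids if i != issue_id]
    if not candidates:
        raise ValueError("need at least one other issue to link to")
    target = rng.choice(candidates)
    return f"Closing #{issue_id} as solved: duplicate of #{target}"


if __name__ == "__main__":
    print(close_as_duplicate(3, [1, 2, 3, 4]))
```

No model required, just `random.choice` and a list of existing issues.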